Don't Let Your Robot Vision Get Fooled and Fail!

Posted on Sep 26, 2019 11:02 AM. 6 min read time

Who says only humans suffer from optical illusions? A robot vision system was recently fooled by a team of engineers. And your vision system can be fooled too! Here's how the engineers did it and how you can avoid it.

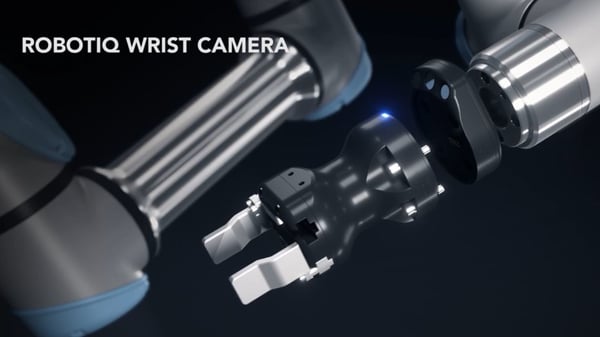

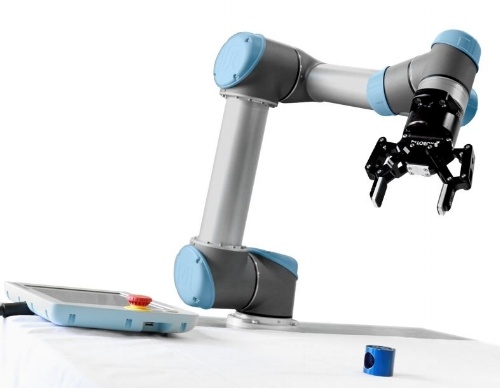

The fastest and easiest camera to use on Universal Robots.

Recently, a team of engineers at the Southwest Research Institute (SWRI) announced that they've found a way to trick object detection algorithms. They can make the algorithms detect objects that aren't present, or hide objects which are present.

This is obviously a concern for makers of security-critical vision applications, such as self-driving cars, intruder detection, and facial recognition. A vision system that can be tricked (either unintentionally or on purpose) could compromise the safety and security of any application that relies on it.

The news is also relevant to those of us who use robot vision for more industrial applications. Even though our robots aren't driving at high speed down a busy motorway — as with self-driving cars — it's important for us to remember that robot vision systems are not perfect.

We need to remember that all vision systems can be fooled by particular situations and objects. We also need to know how to avoid fooling our systems unintentionally.

How the SWRI engineers fooled their vision system

The vision system the SWRI engineers used was based on "deep learning." This is a type of machine learning algorithm which uses layers of artificial neural networks to "teach itself." This type of vision algorithm can learn to recognize many different objects (e.g. people, cars, bicycles) by using a large set of example images.

For more information on deep learning see our previous article about how self-learning robots are coming to industry.

The SWRI engineers fooled their vision system by creating a set of specially designed images which exploited vulnerabilities in the deep learning algorithm. These images basically act like optical illusions. When the images are present, the algorithm either detects an object that doesn't exist or fails to detect objects which are present in the image. For example, one image made the algorithm wrongly detect an SUV as a person.
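To get a feel for how a small, deliberate change to an image can flip a detector's answer, here is a toy sketch. It is not the SWRI engineers' method, which the announcement doesn't describe in detail. It assumes a hypothetical linear "person detector" with made-up weights and a made-up 4-pixel image, and nudges each pixel slightly in the direction that raises the score (a sign-of-gradient step).

```python
# Toy illustration only: a hypothetical linear "detector", not the SWRI system.
# All weights and pixel values below are made up for the example.

def score(weights, bias, pixels):
    """Linear score; a positive value means the detector reports 'person'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def sign(x):
    """Return -1, 0 or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def perturb(weights, pixels, epsilon):
    """Nudge every pixel by at most epsilon in the direction that raises the score.

    For a linear model, the gradient of the score with respect to the pixels
    is simply the weight vector, so we step along the sign of each weight.
    Pixel values are clipped to the valid range [0, 1].
    """
    return [
        min(1.0, max(0.0, p + epsilon * sign(w)))
        for w, p in zip(weights, pixels)
    ]


weights = [0.5, -0.4, 0.3, -0.6]  # hypothetical detector weights
bias = -0.1
image = [0.2, 0.6, 0.3, 0.5]      # hypothetical image that contains no person

adversarial = perturb(weights, image, epsilon=0.3)
before = score(weights, bias, image)        # negative: no person reported
after = score(weights, bias, adversarial)   # positive: "person" reported

print("person detected before:", before > 0)
print("person detected after: ", after > 0)
```

With only four pixels, the nudge has to be fairly large to flip the result. Real images have vastly more pixels, so the change needed per pixel can be far smaller, which is part of why such crafted images can act like optical illusions for the algorithm.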

Newsflash: All vision can be fooled

You might be thinking: How can a computer vision system be fooled?

Aren't optical illusions caused by the human eyes and brain?

Surely robot vision is more robust?

The human vision system is notoriously easy to fool. You just need to look at any one of hundreds of optical illusions to see that our vision system has many "security flaws" (or, at least, that's what you'd call these flaws in robot vision systems). We tend to think of optical illusions as "just a bit of fun." They are so popular that there are even museums devoted to them around the world.

Why do optical illusions occur? You might know already that they happen in the human visual system because the brain tries to make sense of some visual stimulus that doesn't make logical sense. Really, they are caused by the brain's imaginative interpretation of what it sees.

Robot vision, you might think, is much more objective than our human visual system?

Surely a robot can only see what is really there? Robots don't have an "imagination."

However, in reality any vision system can be fooled.

As the engineers at SWRI showed, a computer vision algorithm can be made to see things that aren't there by feeding it images which exploit the algorithm's flaws. The images that the engineers generated looked nothing like the objects which the algorithm mistakenly detected in them. They just fooled the algorithm into seeing something that wasn't there, which is exactly what's happening when we see a chicken in this photo instead of just a church.

Why robot vision gets tricked

Even vision systems which are not based on deep learning can be fooled. Any robot vision system can be tricked by the wrong conditions.

There are two main parts of the vision system that cause this trickery to happen:

- The Sensor: Problems related to lighting and positioning often come down to the vision sensor itself. All sensors have their limitations. The sensor can be fooled if the scene contains information that falls outside its capabilities, for example glare that is too bright for it to capture.

- The Algorithms: Problems like object deformation and scale issues often come down to the detection algorithm. More robust detection algorithms can account for changes in the objects and images, but they can still be fooled in other ways.

There is no vision system that is completely safe from being fooled, as the engineers from SWRI showed with their experiment. Even if someone develops a very robust vision system, it seems likely that it will still be possible to find security flaws which can be exploited.

How to avoid your robot vision being tricked

For most robot vision applications — e.g. with cobot vision — you're not going to use algorithms with deep learning. As a result, the factors which cause bad detection are generally more mundane than those specially designed "optical illusion" images used by the engineers.

There are various "usual suspects" which can negatively affect your object detection including: bad lighting, deformed objects, challenging backgrounds, etc.

Here are two things you can do to avoid fooling your robot vision system:

- Remove challenging factors in your robot setup: Go through the list of the Top 10 Challenges for Robot Vision and try to reduce as many of them as possible in your setup. If bad lighting is causing a problem, change the lighting.

- Aim to make things easy for the system: Where possible, don't try to solve issues within the robot program itself. It's usually much simpler to help the vision system by improving the physical setup. For example, if you're using shiny objects use one of the 10 solutions to improve detection of shiny objects. If you're struggling in the teaching phase, use this unusual trick. If you're doing bin picking, choose the easy way instead of the hard way.
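One way to spot a lighting problem before it fools the detection is to check each captured image for large over- or underexposed areas. The sketch below is only an illustration: the function name, the thresholds, and the tiny sample images are all assumptions, and it isn't tied to any particular camera or vision software. It doesn't try to compensate in software. It just flags the image so that you know to fix the physical setup.

```python
# Minimal sketch: flag badly lit grayscale images (pixel values 0-255).
# The thresholds are hypothetical and would need tuning for a real setup.

def exposure_report(gray, dark=30, bright=225, max_fraction=0.25):
    """Return 'overexposed', 'underexposed' or 'ok' for a grayscale image.

    gray is a list of rows, each row a list of pixel values from 0 to 255.
    The image is flagged when more than max_fraction of its pixels are
    at or above the bright level, or at or below the dark level.
    """
    pixels = [p for row in gray for p in row]
    total = len(pixels)
    too_bright = sum(p >= bright for p in pixels) / total
    too_dark = sum(p <= dark for p in pixels) / total
    if too_bright > max_fraction:
        return "overexposed"
    if too_dark > max_fraction:
        return "underexposed"
    return "ok"


# Made-up 2x4 sample images for the example.
even_image = [[120, 130, 125, 128], [118, 122, 127, 131]]
glare_image = [[120, 255, 255, 128], [118, 255, 127, 131]]  # e.g. a shiny part
dim_image = [[10, 20, 15, 128], [12, 122, 25, 131]]

even_result = exposure_report(even_image)
glare_result = exposure_report(glare_image)
dim_result = exposure_report(dim_image)

print(even_result, glare_result, dim_result)
```

If a check like this flags images regularly, that is a hint to change the lighting or the camera position rather than to add more logic to the robot program.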

As the SWRI engineers showed, fooling vision systems is going to be an increasingly important issue for security-critical applications. A lot of work will be needed in the future to overcome these security flaws.

But most of us just want a robot setup which works well for our application, without being fooled.

And that's much easier to achieve.

Which optical illusions have caused you the most trouble, either for yourself or for your robot? Tell us in the comments below or join the discussion on LinkedIn, Twitter, Facebook or the DoF professional robotics community.
