Research Spotlight: Stable Grasping of Unknown Objects

Posted on May 10, 2016 7:00 AM. 6 min read time

How can you reliably grasp unknown objects and ensure that the grasp is stable? In this post we discuss a new research paper from KTH in Sweden. The researchers used a Robotiq 3-Finger Gripper to accurately predict if a grasp will be stable. But, in some cases, they found that the Robotiq Gripper was too good for their research!

Imagine that you are presented with a table full of everyday objects that you've never seen before. It wouldn't be a stretch of the imagination to say that you'd know how to pick those objects up, right? You wouldn't even give it a second thought. As soon as you touched an object, and probably even before, you would know how to pick it up and whether your grasp would be stable.

Traditionally, such simple tasks have been pretty much impossible for robotic systems. It is, therefore, the sort of problem that makes for quite challenging research. How do you reliably grasp unknown objects and ensure that the grasps are stable?

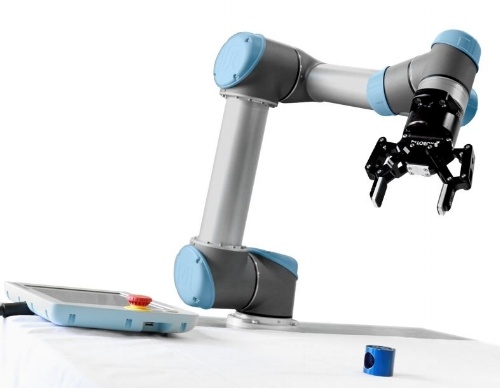

The Computer Vision and Active Perception research group from the KTH Royal Institute of Technology has investigated exactly this challenge using the Robotiq 3-Finger Gripper. In a new paper, presented at last year's International Conference on Robotics and Automation (ICRA), the researchers describe an approach to reliably detect stable grasps of unique objects using tactile sensors. In this post, we will look deeper at their paper and find out how the Robotiq Gripper contributed to the research.

What is Grasp Planning?

Grasp planning has become more important as robotic systems have grown more complex. In the early days of robotics, defining a "grasp" simply meant defining the position and orientation of the gripper. The robot would move to that position and close the gripper. Most of the time the robot didn't even detect if it had successfully picked up the object or not. The "planning" for this type of grasp was carried out by technicians by ensuring that objects were always located in the same place and oriented in the same way for the robot to pick up. This is still the common approach in many industrial applications.

Sensing technologies have improved over the years. Laser scanners and other 3D vision sensors now allow you to detect 3D points on the surface of objects without knowing the position of the object beforehand. This opens up the possibility of much more flexible grasping. Grasp planning researchers try to find ways to reliably calculate grasps from this new data.

One of the challenges of grasp planning is that your vision system will never be able to detect the whole object at once. Some of the object's faces will be hidden from view and the robot's gripper will obscure parts of the object as it moves closer to it. One popular approach for grasp planning uses "force analysis". This requires the system to store a 3D model for every object it might want to grasp. It then tries to match the detected 3D points to an object from a "dictionary" of 3D models. The system then calculates the most stable grasp using the inertial properties of the 3D model.

Why Does Grasp Planning Need to Be Improved?

This traditional method has a few issues. Firstly, it requires that you have 3D models of every object you might want to grasp, which limits the possibility of grasping unknown objects. Secondly, it is computationally expensive to calculate optimal grasps using force analysis. For any readers who are not familiar with the research term "computationally expensive," you can translate it as "takes a really, really long time for the computer to calculate." This effectively means that the robot will stand around doing nothing while it works out how to pick up the object, which is not a very efficient use of the robot's uptime. The researchers from KTH have attempted to solve both of these issues.

They had solved the first issue in a previous paper, published in 2013. In it, they showed how you can effectively replace the dictionary of 3D models with a "dictionary of prototypes," where a "prototype" represents the part of the object which is suitable for grasping. This drastically reduces the required number of 3D models. For example, imagine a fork, a knife and a spoon. Traditional grasp planners might require three separate models, one for each object. With their method, you would only require one model for all three objects, as the shape of the grasp prototype (i.e. the handle) is the same. This allowed the researchers to represent the 32 household items used in their new paper with just three prototype shapes: a cylinder-like shape, a box-like shape and a sort of open-edged cylinder.
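
To make the saving concrete, here is a minimal sketch of the idea in Python. The object names, the prototype labels and the `models_needed` helper are all made up for illustration; they are not taken from the paper.

```python
# Hypothetical object-to-prototype assignments, for illustration only.
# The labels here are invented and are not the paper's prototype shapes.
PROTOTYPE_OF = {
    "fork": "handle",
    "knife": "handle",
    "spoon": "handle",
    "mug": "cylinder",
    "cereal box": "box",
}


def models_needed(objects, prototype_of):
    """Return the set of grasp models required to cover the given objects."""
    return {prototype_of[name] for name in objects}


cutlery = ["fork", "knife", "spoon"]

# A per-object dictionary needs one model for every object.
per_object_models = len(cutlery)

# A prototype dictionary only needs one model per distinct graspable shape.
prototype_models = len(models_needed(cutlery, PROTOTYPE_OF))
```

In this toy case the three pieces of cutlery share a single grasp model, which is the same kind of reduction that lets a large set of household items be covered by a handful of shapes.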

How to Detect Grasp Stability Using a Robotiq Gripper

The researchers aimed to solve the second issue in their new paper. How can you determine that a grasp is going to be stable?

Traditionally, grasp planning has been performed in an open-loop manner. Stability of the grasp was calculated by the planner, but the robot would have no way of sensing if the grasp was actually stable when it picked up the object. The researchers overcame this limitation by using tactile sensors to detect the forces applied to the object by the fingers. They used machine learning techniques to teach the robot which sets of tactile inputs produced stable grasps. This gives the robot the ability to decide if a grasp will be stable or not before it picks up an object, which is pretty useful.

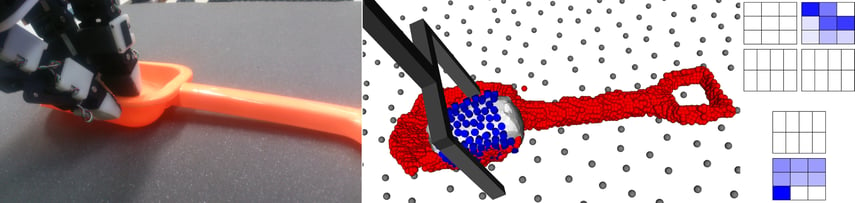

To do this, the researchers attached a set of thirteen tactile sensors to the fingers and palm of a Robotiq 3-Finger Gripper. They told us that the Robotiq Gripper was a great choice because of its good dexterity-to-price ratio, its impressive mechanical compliance and, most importantly for this research, because it offered an interesting variety of grasping scenarios.

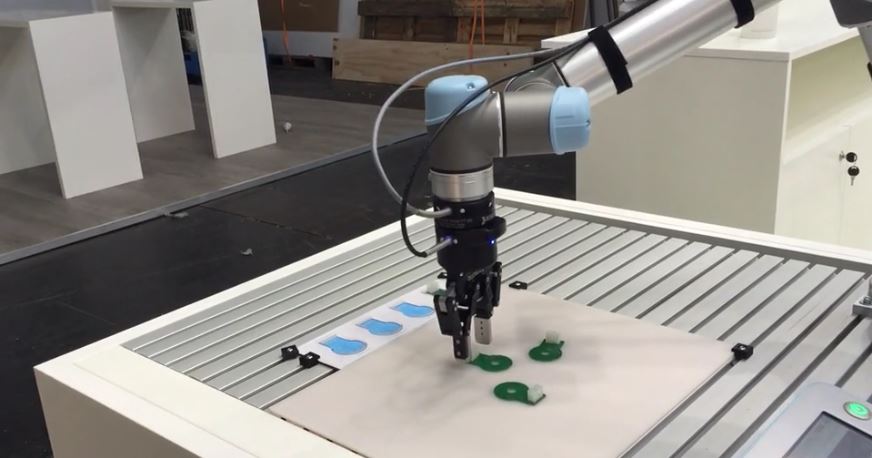

The research described in the paper involved using 32 household items. Each one was placed in front of the robot, which grasped the object as calculated by the grasp planner for its prototype shape. The robot picked up the object and the researchers applied a force to the object (i.e. pushed it) to verify that it was firmly gripped. If not, the grasp was marked as "unstable". When the researchers had gathered 64 trials for each prototype shape (32 successful and 32 unsuccessful), they used the data to train three machine learning classifiers, one for each prototype.
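
The general workflow of training one classifier per prototype can be sketched roughly as follows. This is only an illustration under loud assumptions: the post does not say which classifier or features the researchers used, so a simple nearest-centroid classifier stands in for it, the tactile readings are synthetic random numbers, and the shape names are placeholders.

```python
import random

NUM_SENSORS = 13  # the post mentions thirteen tactile sensors


def centroid(rows):
    """Mean reading per sensor across a list of trials."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def train(trials):
    """Build a nearest-centroid model from (readings, label) pairs.

    This is a stand-in classifier, not the one used in the paper.
    """
    by_label = {}
    for readings, label in trials:
        by_label.setdefault(label, []).append(readings)
    return {label: centroid(rows) for label, rows in by_label.items()}


def predict(model, readings):
    """Return the label whose centroid is closest to the readings."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(readings, center))
    return min(model, key=lambda label: sq_dist(model[label]))


def synthetic_trials(rng, n_per_class):
    """Made-up data: stable grasps press harder on average than unstable ones."""
    trials = []
    for label, mean in (("stable", 0.8), ("unstable", 0.3)):
        for _ in range(n_per_class):
            readings = [rng.gauss(mean, 0.1) for _ in range(NUM_SENSORS)]
            trials.append((readings, label))
    return trials


rng = random.Random(0)

# One classifier per prototype shape, each trained on a balanced set of
# 32 stable and 32 unstable trials (64 in total, as in the post).
classifiers = {
    shape: train(synthetic_trials(rng, 32))
    for shape in ("cylinder", "box", "open_cylinder")
}
```

Given a new grasp on a box-like object, you would call something like `predict(classifiers["box"], readings)` before lifting, and only lift if the answer is "stable".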

The Robotiq Hand Was Too Good!

The researchers only focused on "precision grasps" for this study. This is where objects are pinched between the tips of the robot's fingers (e.g. picking up a pencil). The alternative type of grasp is a "power grasp" (e.g. holding the pencil in your fist). The researchers had intended to test their approach using power grasps as well. However, in the end this wasn't possible because the Robotiq 3-Finger Gripper was too successful when lifting objects using power grasps.

Machine learning algorithms need a good balance of successful and unsuccessful examples in their training data. Without this balance, the classifier won't be able to effectively learn what it is about a grasp which makes it stable, and it will perform badly. The Robotiq 3-Finger Gripper was able to perform stable power grasps most of the time. Although this wasn't so helpful for the study, it provides a great lesson for non-research users of the Gripper: If you want to ensure a secure grasp using the 3-Finger Gripper, use a power grasp.
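
A small worked example shows why the imbalance matters. The numbers below are hypothetical (they are not from the paper): suppose 95 out of 100 power grasps were stable. A "classifier" that has learned nothing and always answers "stable" would still look accurate, while never catching a single unstable grasp.

```python
def always_stable(_readings):
    """A useless classifier that ignores its input."""
    return "stable"


def accuracy(labels, predictions):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for y, p in zip(labels, predictions) if y == p)
    return correct / len(labels)


def recall(labels, predictions, target):
    """Fraction of the true `target` cases that were predicted as `target`."""
    relevant = [(y, p) for y, p in zip(labels, predictions) if y == target]
    hits = sum(1 for y, p in relevant if p == target)
    return hits / len(relevant)


# Hypothetical, heavily imbalanced outcome of 100 power grasps.
labels = ["stable"] * 95 + ["unstable"] * 5
predictions = [always_stable(None) for _ in labels]

acc = accuracy(labels, predictions)
unstable_recall = recall(labels, predictions, "unstable")
```

Here `acc` comes out high even though `unstable_recall` is zero, so the headline accuracy says nothing about whether the classifier can actually spot a bad grasp. That is why a balanced set of stable and unstable examples matters for training.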

The Results of the Study

The main purpose of this research study was to demonstrate that it is possible to classify whether a grasp will be "stable" or "unstable" by using the data from tactile sensors in the robot's fingers. The researchers also wanted to show that you can improve the performance of such a classifier by using shape prototypes. The results of the experiment showed that their method was able to correctly predict stability of a grasp in 89% of the test cases, and that shape prototypes contributed to a better performance of the classifiers. For more details, you can find the original paper here.

How could this research be applied in your own application? Do you think this would be a useful approach for your setting? What challenges can you see with it? Tell us in the comments below or join the discussion on LinkedIn, Twitter or Facebook.
