How Template Matching Works in Robot Vision

Posted on Jun 30, 2016 in Vision Systems

What does a robot vision system see when it detects an object on the production line? Computer vision systems have become both advanced and intuitive in recent years, so it is easy to forget that they are still very rudimentary compared to human vision. In this article, we look at one common approach to robot vision, template matching, and what it means for the way you set up vision for your robot.

Back when I was in university, I was part of a team trying to design a robot to play Jenga. One of my tasks was to develop the computer vision algorithms that would detect the tower and the blocks within it. Intuitively, this seemed to me to be quite a simple task: the tower was made of many parallel straight lines; we only needed to detect if a block was actually present, not model the accurate positions of the blocks; and the environment lighting was completely controlled by us.

However, it turned out that the task was far more complicated than I could have imagined. The straight lines between the blocks were not as clear to the camera as they were to my eyes. A hole containing a block looked exactly the same as a hole without a block when the image was reduced to detected edges. The lighting, which I had thought was controlled, turned out to be sensitive to changes in daylight.

Although the task seemed very easy to me, it was a big challenge for machine vision. This is not uncommon. When designing computer vision setups, you have to forget what your eyes see and start by thinking about what the camera and algorithms actually detect.

What a Robot Doesn't See

The human visual system is quite amazing. We are able to recognize the same object under remarkably different conditions. There can be changes in lighting, texture, size, distance, shading, color, motion, and many more visual properties. We will still be able to recognize the object.

As this robotics thesis explains, our visual system is so good partly because "the hardware on the average human is excellent – high resolution color stereo cameras with built-in preprocessing." It is also effective because "this low-level information somehow gets combined with high-level domain knowledge about what [the object] generally looks like (a rough sense of a “template”)."

Robotic vision systems also use templates to detect known objects. However, they are nowhere near as advanced as the templates used in the human visual system. We are able to see that something is "a car" even if we have never seen the exact model of car before, because we are able to generalize what "carness" looks like. A robot can only see what is actually in the image.

How a Vision Sensor Detects an Object

Let's introduce the basics of a computer vision algorithm. Now, modern vision systems often use advanced combinations of different machine vision techniques. Some are impressively robust to changes in lighting, distortion, low contrast and many of the other issues that have traditionally plagued vision setups.

In this article, we're just looking at one basic method: edge detection followed by template matching. Even if you're using a system with more advanced capability, you'll design a much more robust setup if you imagine your system is basic.

Edge Detection: Is There Any Object Here?

Edge detection is the first step for many machine vision systems. It involves tracing the edges within the image by detecting the borders between dark and light areas.

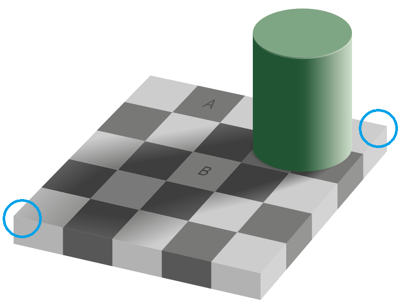

You've probably seen this image before. It's an optical illusion which demonstrates how our own visual system is good at correcting for lighting changes. Squares A and B are exactly the same shade of grey (see here for the proof) but we see B as lighter because our brain corrects for the shadow cast by the cylinder. A computer vision algorithm working on the raw grayscale image would see the squares as exactly the same. However, an edge detection algorithm would still be able to detect the lines by detecting pixels where the brightness changes sharply compared to their neighbors. In this instance, edge detection would allow the algorithm to correct for lighting inconsistencies. It would draw lines between the squares.

However, in other parts of the image, an edge detection algorithm might have problems. Consider the lines around the edge of the checkerboard, along the bottom left and right edges. The differences in brightness between the two faces are very small, so the algorithm might not detect them as edges. This is clearest if you look at the corners which I've circled in blue. The gray in both faces is almost exactly the same: at the corner they differ by just 3 brightness levels (on a scale of 0 to 255). We see an edge because we look at the whole shape, but the algorithm would not. Similarly, an algorithm might falsely detect an edge down the middle of the cylinder, due to the harsh shadow.
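To make this concrete, here is a minimal sketch of the simplest kind of edge detector: it marks a pixel as an edge when its brightness differs sharply from the pixel to its right or the pixel below it. The function name `detect_edges`, the `threshold` value of 20 and the pixel values are all assumptions chosen for illustration. Real vision systems typically use more sophisticated detectors.

```python
import numpy as np

def detect_edges(image, threshold):
    """Mark pixels whose brightness differs sharply from a neighbor.

    image: 2D array of grayscale values (0 to 255).
    threshold: minimum brightness difference that counts as an edge
               (a hypothetical tuning parameter).
    """
    img = image.astype(np.int32)  # avoid uint8 wrap-around when subtracting
    edges = np.zeros(img.shape, dtype=bool)
    # Absolute difference between each pixel and the pixel to its right
    dx = np.abs(img[:, 1:] - img[:, :-1])
    # Absolute difference between each pixel and the pixel below it
    dy = np.abs(img[1:, :] - img[:-1, :])
    edges[:, :-1] |= dx >= threshold
    edges[:-1, :] |= dy >= threshold
    return edges

# One row of made-up pixels: a dark square (80), a light square (160),
# then two faces that differ by only 3 brightness levels (120 and 123).
row = np.array([[80, 80, 160, 160, 120, 120, 123, 123]], dtype=np.uint8)
edges = detect_edges(row, threshold=20)
print(edges[0].astype(int))
```

With these values, the boundaries between 80 and 160 and between 160 and 120 are marked as edges, but the boundary between 120 and 123 is not. Lowering the threshold to 3 would catch it, at the cost of also picking up noise and soft shadows in a real image.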

When designing vision setups for a robot, it's important to pay attention to what is actually present in the image, rather than what your own visual system sees. In future articles, we'll discuss specific steps you can take to avoid these traps. For now, just remember - don't trust your own eyes.

Template Matching: Is this Really My Object?

The next step taken by many robotic vision systems is template matching. This involves taking a template image of the object and trying to find areas of the current image which are similar to the template. Template matching algorithms allow you to detect the position of the object within the current image. Some algorithms can also detect rotated objects, scaled objects and even objects with distortion or occlusion.

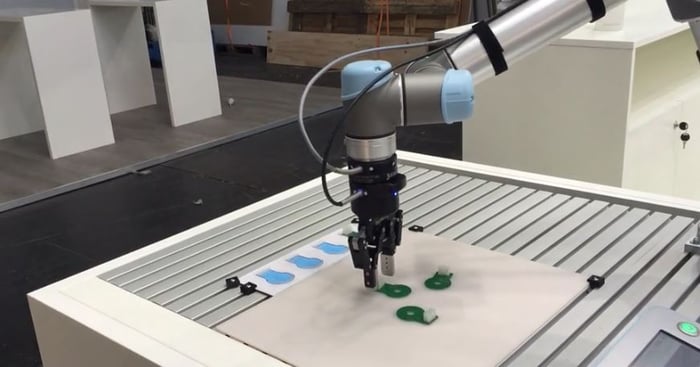

In robot vision systems, setting the template image is often as simple as putting the object under the camera then dragging a box around the object using a graphical user interface. The system will save the template image and use it as a reference to find the object in future images.

The most basic method of template matching is to directly compare the grayscale images, without using edge detection. For example, if you were trying to detect a circuit board in a collaborative pick-and-place operation, you would take a cropped image of a single circuit board as the template. During operation, the template matching algorithm would analyze the current camera image to find areas which are similar to the template. This basic approach is quite limited. For one thing, it is not robust to inconsistent changes in brightness within the image. Using a vision sensor with an integrated light source would help by providing consistent lighting.
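As a rough illustration of direct grayscale comparison, the sketch below slides a template over a scene and scores each position by the sum of squared differences (SSD) between pixels. SSD is just one possible similarity measure, picked here as an assumption. The function name `match_template_ssd` and the synthetic images are also made up for the example.

```python
import numpy as np

def match_template_ssd(image, template):
    """Slide the template over the image and return the (row, col) of the
    top-left corner with the smallest sum of squared differences (SSD),
    together with that SSD score. Lower scores mean a closer match."""
    img = image.astype(np.float64)
    tpl = template.astype(np.float64)
    th, tw = tpl.shape
    ih, iw = img.shape
    best_pos, best_score = None, np.inf
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            window = img[r:r + th, c:c + tw]
            score = float(np.sum((window - tpl) ** 2))
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score

# Synthetic scene: uniform gray background with one "object" pasted in
template = np.array([[200, 50, 200],
                     [50, 200, 50],
                     [200, 50, 200]], dtype=np.uint8)
scene = np.full((8, 10), 100, dtype=np.uint8)
scene[2:5, 4:7] = template

pos, score = match_template_ssd(scene, template)
print(pos, score)

# The same scene, but every pixel is 40 brightness levels darker
darker = (scene.astype(np.int32) - 40).clip(0, 255).astype(np.uint8)
pos_dark, score_dark = match_template_ssd(darker, template)
print(pos_dark, score_dark)
```

In the original scene the best position has a score of zero, a perfect match. In the darker scene the object is still found at the same position, but the score is far from zero, so a system that accepts matches only below a fixed score could reject the object purely because the lighting changed.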

A slightly more advanced form of template matching is to extract the edges in the image, as described above. The object edges are extracted from the template image. This outline is then matched to the edges in the current camera image. This is more robust to changes in lighting, although the algorithm can still be fooled by harsh shadows, lines in the background and distortion.
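The sketch below illustrates the idea under simple assumptions: edges are found with a basic neighbor-difference test, and each candidate position is scored by how many of the template's edge pixels line up with edge pixels in the scene. The names `edge_map` and `match_edges`, the threshold of 20 and the synthetic images are hypothetical. Commercial vision systems use more elaborate matching than this.

```python
import numpy as np

def edge_map(image, threshold=20):
    """Boolean map of pixels that differ sharply from the pixel to the
    right or the pixel below (threshold is an assumed tuning value)."""
    img = image.astype(np.int32)
    edges = np.zeros(img.shape, dtype=bool)
    edges[:, :-1] |= np.abs(img[:, 1:] - img[:, :-1]) >= threshold
    edges[:-1, :] |= np.abs(img[1:, :] - img[:-1, :]) >= threshold
    return edges

def match_edges(image, template, threshold=20):
    """Return the (row, col) where the template's edge pixels overlap the
    most edge pixels in the image, plus the overlap count."""
    img_edges = edge_map(image, threshold)
    tpl_edges = edge_map(template, threshold)
    th, tw = tpl_edges.shape
    ih, iw = img_edges.shape
    best_pos, best_score = None, -1
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            window = img_edges[r:r + th, c:c + tw]
            score = int(np.sum(window & tpl_edges))
            if score > best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score

# Template: a bright 3x3 square with a one-pixel background border
template = np.full((5, 5), 100, dtype=np.uint8)
template[1:4, 1:4] = 200

# Scene: the same square on a larger background
scene = np.full((8, 10), 100, dtype=np.uint8)
scene[3:6, 4:7] = 200

pos, score = match_edges(scene, template)
print(pos, score)

# Uneven lighting: brightness rises gently from left to right
shaded = scene + 2 * np.arange(10)
pos_shaded, score_shaded = match_edges(shaded, template)
print(pos_shaded, score_shaded)
```

Here the gentle lighting gradient changes every pixel value, yet the edge maps are unchanged, so the match position and score are the same in both scenes. A harsh shadow would behave differently: if its boundary exceeded the threshold it would appear as an extra edge and could confuse the match.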

In future posts, we will look at how to design vision setups which get the most from template matching algorithms. For now, just remember that clear template images, with well defined edges and consistent lighting, will improve your object detection. By remembering how computer vision works, you can ensure that your vision setup is as robust as possible.

Do you have any questions about template matching? How could you integrate vision into your robot application? Which vision systems have you used? Tell us in the comments below or join the discussion on LinkedIn, Twitter or Facebook.