Human-Robot Collaboration - Collaborative Robots and Vision Systems

Posted on Jul 15, 2014 8:00 AM. 4 min read time

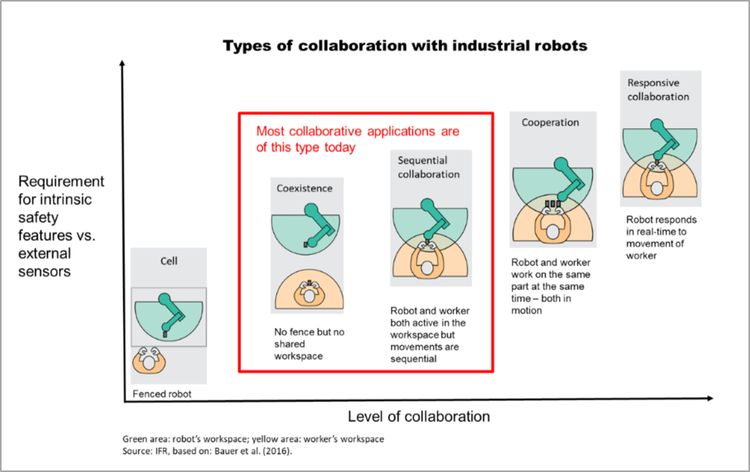

In the short history of robotics, a few key stages stand out in the integration of robots into the workplace. The first is rigid automation, in which an industrial robot follows a fixed path. This kind of automation is still used on manufacturing lines where safety devices are installed or where heavy loads are moved through the robot's working area. The safety features are usually safety cages and pressure sensors that stop the robot if an impact is detected.

In the past couple of years, collaborative robots have seen an incredible rise in the robotics market. These robots are used for applications that require humans to work alongside robots, mainly assembly tasks with low payloads. Their safety features are highly sensitive pressure sensors that detect contact with a human. These robots are able to learn and to adapt their path to their environment. In brief, collaborative robots don’t need protective cages because they use different safety devices and operate at low speed. This is key to future human-robot collaboration.

Robot Safety 2.0

The next iteration in robot safety is the use of 3D cameras in the robot's environment. A 3D camera allows the robot not only to be stopped when the pressure sensor feels an impact, but also to prevent the impact by anticipating motion. With projects such as "Vision Guided Motion Control for Industrial Robots", this idea is now a reality. The project is led by the Collaborative Advanced Robotics and Intelligent Systems Laboratory (CARIS) at UBC (University of British Columbia). In the following video, a Kuka collaborative robot paired with a Robotiq 3-Finger Adaptive Gripper is filmed by a 3D camera that gives instant feedback to the robot arm.

As you can see in the video, the camera is able to recognize a human or an object in the robot's trajectory. The system can also change the collaborative robot's path while it is executing the task, and the robot can change paths several times before it reaches its final goal. It can also detect fast movement, such as a part falling into the robot's path. This integration allows for a more open workspace and helps avoid impacts between the robot and the worker. As the demonstration shows, even with collaborative robots, small impacts can occur without 3D vision. When 3D vision is incorporated into the robot cell, the prediction algorithm is meant to prevent these impacts before they happen. The same approach can also be applied to industrial robots, eventually reducing or even removing safety devices, which gives the robot more freedom and reduces its footprint on the shop floor. For more information, see the CARIS project.
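
To make the idea of "anticipating the motion" a bit more concrete, here is a minimal Python sketch. It is not the CARIS algorithm, which this post does not describe in detail. It simply assumes the camera gives a position and a velocity for each tracked person or part, extrapolates that motion in a straight line, and picks the first candidate path that stays clear. All the names and numbers (`TrackedObject`, `path_is_blocked`, `choose_path`, the 1 second horizon, the 0.3 m clearance) are made up for illustration, and the check only looks at waypoints, not at the segments between them.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]  # x, y, z in metres


@dataclass
class TrackedObject:
    """A person or part seen by the 3D camera (hypothetical data format)."""
    position: Point
    velocity: Point  # metres per second, estimated between camera frames


def predict_position(obj: TrackedObject, dt: float) -> Point:
    """Extrapolate the object's position dt seconds ahead at constant velocity."""
    x, y, z = obj.position
    vx, vy, vz = obj.velocity
    return (x + vx * dt, y + vy * dt, z + vz * dt)


def path_is_blocked(
    waypoints: Sequence[Point],
    obj: TrackedObject,
    horizon: float = 1.0,    # how far ahead to look, in seconds (assumed)
    step: float = 0.1,       # time resolution of the prediction (assumed)
    clearance: float = 0.3,  # minimum allowed distance in metres (assumed)
) -> bool:
    """True if the object is predicted to come too close to any waypoint."""
    steps = int(round(horizon / step))
    for i in range(steps + 1):
        future = predict_position(obj, i * step)
        if any(math.dist(future, w) < clearance for w in waypoints):
            return True
    return False


def choose_path(
    candidates: Sequence[List[Point]], obj: TrackedObject
) -> Optional[List[Point]]:
    """Return the first candidate path predicted to stay clear, or None to stop."""
    for path in candidates:
        if not path_is_blocked(path, obj):
            return path
    return None
```

Calling `choose_path` on every camera frame would let the robot switch paths several times on the way to its goal, and a `None` result would be the cue to stop and wait. A real system would also have to account for the robot's own motion along the path and for sensor noise.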

Next Level of Human-Robot Collaboration

The next step in human-robot collaboration is to let the human worker control the robot without a teach pendant or controller. The "Collaborative, Human-focused, Assistive Robotics for Manufacturing" (CHARM) project can identify different human motions and react to them. The vision of the project is that future industrial robots will assist people in the workplace, support workers in a variety of tasks, improve manufacturing quality and processes, and increase productivity. CHARM is a multi-institutional project involving UBC (University of British Columbia), Laval University and McGill University. All three universities bring different knowledge and ideas to the project.

In the following video, the worker enters a workspace that is predefined in the program. The 3D camera can detect a set of gestures that are mapped to robot motions. For example, a right-hand gesture toward the robot means that the worker is requesting a part, so the collaborative robot brings the part to the appropriate point at a speed that is safe for the worker.
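
The gesture-to-motion mapping described above can be pictured as a simple lookup that only fires when the worker is inside the predefined workspace. The Python sketch below is a guess at the general shape, not CHARM's actual software. The gesture label, the `Workspace` box, the `Command` type and the 0.25 m/s speed cap are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]  # x, y, z in metres


@dataclass(frozen=True)
class Workspace:
    """Predefined zone modelled as an axis-aligned box (an assumption)."""
    min_corner: Point
    max_corner: Point

    def contains(self, p: Point) -> bool:
        return all(lo <= c <= hi
                   for lo, c, hi in zip(self.min_corner, p, self.max_corner))


@dataclass(frozen=True)
class Command:
    action: str
    max_speed: float  # metres per second; the cap is an illustrative value


# Hypothetical gesture labels as they might come out of a 3D vision system.
GESTURE_COMMANDS: Dict[str, Command] = {
    "right_hand_toward_robot": Command(action="bring_part", max_speed=0.25),
}


def react(gesture: str, worker_position: Point,
          workspace: Workspace) -> Optional[Command]:
    """Map a detected gesture to a robot command, or None if it should be ignored."""
    if not workspace.contains(worker_position):
        return None  # gestures made outside the predefined workspace do nothing
    return GESTURE_COMMANDS.get(gesture)  # unknown gestures also return None
```

Keeping the mapping in a plain dictionary means new gestures can be added without touching the logic, and the workspace check keeps a passer-by from triggering the robot by accident.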

Some gestures flag a defective part and others signal the collaborative robot to take corrective action. The 3D vision system can also recognize different working zones, which means the robot can take up different working positions at the same station. The project is supported by GM Canada. This software and hardware is intended to enhance productivity in the automotive industry by making human-robot collaboration effortless. For further information, see the CHARM project.

Even though these projects are relatively advanced, we will have to wait a couple of years before seeing them in action on real assembly lines. I am sure, however, that the robotics industry is eagerly waiting to see these applications introduced into its workshops and labs. Stay in touch with these projects and keep following the evolution of this technology for human-robot collaboration.
