Would You Trust Your Washing Machine in a Fire?

Posted on Apr 25, 2016 7:00 AM.

There have been a few high-profile warnings over the past year about artificial intelligence and robotics. Elon Musk, Stephen Hawking and Bill Gates have all weighed in to warn against the dangers of advanced AI. But one new study suggests we should reconsider what the most disconcerting aspect of intelligent robots really is, and it's far closer to home.

We've been talking quite a lot about programming over the past few weeks here on the Robotiq blog. As roboticists, we all know that programming is what makes our robots work. We understand that it's our own programming choices that decide how a robot acts. However, members of the wider public don't necessarily share this understanding of robotics. Fueled by news stories claiming that robots will take over the world or that artificial intelligence is a threat to humanity, many people might actually believe that modern robots are reaching a stage of development equivalent to the Terminator.

If you're anything like me, you'll occasionally find yourself in a discussion with someone who knows nothing about robotics and worries that "Robots are going to take over! They are evil." They might not use these exact words, and they might be half joking, but this is their general line of argument.

It can be tempting to just ignore these discussions and quickly steer the conversation onto another topic. However, as a roboticist you are in a valuable position: with your knowledge, you have the potential to put that person's mind at rest and change their perception of robotics completely. In some ways, it is our duty to assuage the fears of the general public, just as it could be the duty of an aeronautics engineer to explain the safety of passenger aircraft to someone who is afraid of flying.

In this article, we're going to discuss why people's perceptions of robot intelligence might be far more dangerous than robots could ever be themselves. We'll also see how, by educating people, you might even save their life.

Why people are afraid of a robot uprising

If you do meet someone who is genuinely concerned about AI or robotics, the first thing to do is to show them you understand why they are concerned. People are generally fearful of things they don't understand. Robotics is a pretty advanced field, so it's reasonable that people don't understand it. For example, most people don't realize the significant difference between robotics and artificial intelligence.

Over the last couple of years, some pretty high-profile people have started to talk seriously about the dangers of AI. In 2014, Stephen Hawking said that AI could bring about the end of mankind. Then last year, Elon Musk and Bill Gates both expressed concern about its "existential threat to humanity" in an open letter with MIT researchers. While they're mostly talking about the future development of some "Super AI", such stories have spread far and wide in the media, and readers might not pay close attention to the subtleties of what is actually being said. The concern isn't that AI has already gotten out of hand; mostly, these discussions are trying to encourage regulation.

Who has moral responsibility, the robot or the robot programmer?

One thing that confuses the issue is the popular debate around the question: Can an artificial intelligence have morals? People are understandably excited by the idea because it raises a host of interesting philosophical questions. A recent article over on Phys.org even asked whether robotic politicians would be more effective than human politicians, since they would be ethically unbiased. Robot ethics is a topic we've covered before.

It's certainly an intriguing debate. However, could too much discussion around "moral robots" give people a distorted view of the capabilities of robots?

The other day I attended a discussion at the Edinburgh International Science Festival titled "The Rights of The Machine". The topic was whether artificially intelligent robots should be granted legal rights, i.e., rights for robots akin to human rights or animal rights. Although the premise of the event was perhaps a bit sensationalist, the discussion itself raised a lot of reasonable considerations. For one thing, someone pointed out that the morals of a computer program are essentially the morals of its programmer. This is true even of self-learning algorithms, which have to be heavily constrained if they are to be useful at all, as we discussed recently regarding AlphaGo.

As programmers ourselves, we understand just how limited a computer program really is. The general public, however, doesn't have our insight. They are likely to believe a robot is more capable than it really is, which, it turns out, could be fatal.

Would you trust your washing machine in a fire?

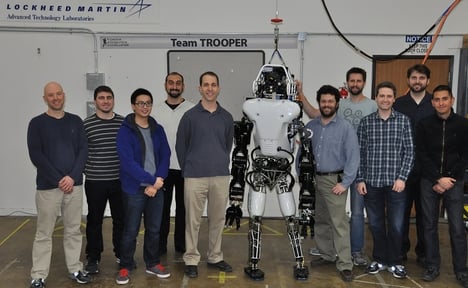

In March this year, a surprising paper was presented at the International Conference on Human-Robot Interaction. It described a study in which 42 volunteers were instructed to follow a robot into a room. Then, without their knowledge, a simulated fire emergency was initiated. The robot lit up and displayed a sign reading "Emergency Guide Robot." As the researchers had hoped, the participants followed the robot as it supposedly led them to safety. But there was a catch! The robot was terrible at its job. It repeatedly directed the volunteers into danger instead of away from it, guided them around in circles and led them away from the clearly marked exit signs.

Of course, the robot was really being remotely controlled by the experimenters. They wanted to find out how much people would trust a robot in an emergency situation. The results surprised the researchers completely. Even though the robot repeatedly proved itself untrustworthy, the volunteers continued to follow its instructions. They ignored the well-lit "Fire Exit" sign and followed the robot into darkened rooms and towards smoke, and they even obeyed its instructions after the experimenters had previously told them it was broken.

The results of the experiment are, admittedly, a little funny. On another level, however, they are also deeply worrying. Clearly the participants viewed the robot as a figure of authority, and this image wasn't broken when the robot proved itself faulty. I wonder whether the participants did this because they didn't really understand the robot's capabilities. In a similar situation, I imagine that you or I (as we have experience with robots) would be quite quick to distrust a dodgy robot. So why did the participants trust it?

Without an accurate mental model of how a piece of technology is supposed to work, we have little way of telling whether it is faulty. We trust our washing machine as long as it cleans our clothes properly, but as soon as it stops performing that function we declare it broken, because we know how it's supposed to work. We certainly wouldn't trust our washing machine to save us in a fire, not even if it were a smart washing machine. However, what level of artificial intelligence would it take for us to trust an AI over our own common sense? Have you ever followed the GPS in your car along totally the wrong route? The problem might be closer than we think.

The real concern about intelligent robots probably isn't the development of some "Super AI" that develops a desire to kill us. The bigger worry is that people will misunderstand the capabilities of robots and AI and wrongly entrust their lives to them. How can we solve this? By educating people about robots in a realistic way and explaining their limitations, which is something we can all do.

Have you ever had to explain robot limitations to a non-roboticist? What did you tell them? How do you think we could educate people about robot capabilities? Tell us in the comments below or join the discussion on LinkedIn, Twitter or Facebook.
