Key takeaways from Humanoids Summit Silicon Valley 2025

Posted on Dec 18, 2025 in Automation, Palletizing


Data, Deployment, and the Real Path to Physical AI

The Humanoids Summit made one thing very clear: progress in humanoid robotics is limited not by ambition but by data, reliability, and the realities of deployment.

Across talks, demos, and hallway conversations, a consistent theme emerged. The industry is no longer asking whether humanoids will work, but how to train them, evaluate them, and deploy them safely at scale.

Here’s what stood out most.

The real bottleneck: Data

Everyone agrees that high-quality data is the foundation of Physical AI. The nuance isn’t about whether to collect a certain type of data; teams want as much as they can get. The difference is in how they allocate resources across the data spectrum, because each layer comes with its own cost, difficulty, and payoff.

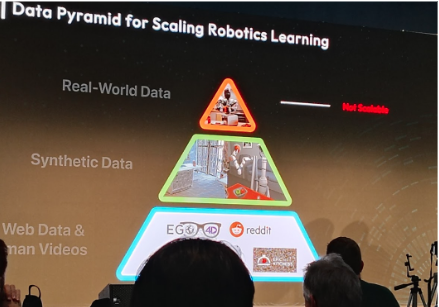

Most teams described some version of a “data pyramid”:

1. Real robot deployment

This is the gold standard. Real robots performing real tasks generate the most transferable data. The problem?

It doesn’t scale.

Deployments are expensive, slow, and constrained by hardware availability. Even the most advanced teams can only collect so much data this way.

2. Teleoperation

Teleop is becoming a key middle ground. Some of the innovations we saw combined digital teleoperation with real-world teleoperation.

We spoke with several startups working on this layer:

- Contact CI, with haptic gloves

- Lightwheel, enabling large-scale digital teleoperation

- Labyrinth AI, with VR-based approaches that translate human motion into robot joint data

Teleop data is more scalable than full deployment, but still resource-intensive.

3. Human-centered data (video, motion capture)

This is the most abundant data source… and the least transferable.

Human video datasets are widely available, but translating them into reliable robot behavior remains challenging.

The emerging consensus?

Most teams are training models first on large-scale human data, then fine-tuning with teleop and real deployment data. It’s a pragmatic approach to a difficult scaling problem.

The open question remains:

Do humanoids need billions of data points—or trillions? And how efficiently can that data be converted into useful behavior? Will new algorithms grounded in physics and kinematics alleviate the data dependency problem?

Two competing philosophies: Generalization vs deployment

Another major divide at the summit centered on where to focus effort.

The “Generalizable Model” Camp

Companies like Skild AI, Galbot, and others are betting on large, foundational models that can generalize across many tasks. They are playing the long game: building massive datasets, simulation pipelines, and broad reasoning capabilities.

The upside is clear: long-term flexibility.

The risk is just as clear: long timelines, high burn rates, and limited near-term deployment.

The “Reliable Deployment” Camp

Other companies are prioritizing application-ready humanoids:

- Agility

- Field AI

- Persona

- torqueAGI

These teams are focusing on reliability, safety, and narrow but valuable use cases. Agility stood out for already having humanoids at work in customer warehouses.

Their message was consistent:

If the robot isn’t reliable, a human has to supervise it, and then the ROI disappears.

World models, foundational models, and a missing piece: Evaluation

Many speakers focused on the emergence of World Foundation Models, systems with a broad ability to understand physical interactions. The conversation centered on the best way to build and train them: what data they need, how they generalize across environments, and how much physical interaction is required to learn meaningful behaviors.

High-fidelity world models are hard to build because they require extremely accurate physical data. Even harder? Evaluating progress.

Right now, there’s no standard way to measure whether a world model is truly improving real-world task performance. NVIDIA’s upcoming evaluation arenas were mentioned as a promising step, but this remains an open challenge.

Where humanoids actually make sense today

Agility presented one of the clearest frameworks for humanoid value:

Humanoids shine where you need:

- Mobility in cluttered, changing environments

- Flexibility to rotate between multiple tasks

- Dynamic stability to pick, lift, and move payloads from awkward positions

One compelling example was using a humanoid to link two semi-fixed but unstructured systems—like moving goods from a shelf on an AMR to a conveyor. These are workflows that are awkward for traditional robots but natural for human-shaped machines.

Deployment reality: Configuration, reliability, safety

Several themes came up repeatedly when discussing real-world deployment:

- Configurability: If deployment isn’t straightforward, you lose flexibility—the core humanoid value proposition.

- Reliability: Unreliable robots simply shift work instead of eliminating it.

- Safety: At scale, humanoids must be robustly safe.

These challenges mirror what manufacturers already know from collaborative automation: technology only creates value when it works consistently, safely, and predictably.

Hands vs grippers: A surprising consensus

One of the most animated debates was about hands versus grippers.

Despite impressive demos of anthropomorphic hands, most practitioners were candid:

- Hands are hard to control

- They are difficult to deploy reliably

- Dexterity adds significant complexity

The prevailing view was pragmatic:

Grippers (especially bimanual setups) will dominate in the near term.

They solve the majority of manipulation tasks with far less complexity. Dexterous hands may arrive later, but grasping comes first.

That said, interest in tactile sensing was strong. Researchers and companies are exploring:

- How to structure tactile and haptic data

- What robots should actually measure

- How to visualize and use contact information effectively

What this means for Robotiq

From a Robotiq perspective, a few conclusions stand out:

- The humanoid ecosystem needs feature-dense, scalable, reliable hardware

- Ease of integration, from hardware to software and communication, is essential; this is where Robotiq's plug-and-play mentality fits nicely

- Grippers will remain central to real-world Physical AI in the near term

- Force-torque and tactile sensing are increasingly relevant, from humanoids to prosthetics

- Customization (fingertips, form factors) will matter for emerging manipulation tasks like scooping or cloth handling

Perhaps most importantly, the summit reinforced a familiar lesson: automation succeeds when it moves from impressive demos to operational reliability.

Final takeaway

Humanoid robotics is progressing rapidly—but not linearly. The companies making real progress are the ones grappling seriously with data quality, deployment constraints, and safety at scale.

The future of Physical AI won’t be decided by the flashiest demo. It will be decided by who can deliver reliable systems, trained on the right data, solving real problems—day after day.

That’s where humanoids stop being research projects and start becoming tools.

