Robotiq brings the sense of touch to Physical AI

Posted on Jan 27, 2026 in Robot Grippers

4 min read time

Physical AI has reached a critical point. Robots can see, plan, and decide better than ever—but manipulation in the real world is still the bottleneck.

Robots can see objects with impressive accuracy, yet still drop them, crush them, or fail to adapt when contact doesn’t go as planned. The limitation isn’t compute or models. It’s the lack of touch.

Real-world learning requires contact awareness. Force. Slip. Interaction feedback. Without those signals, robots are forced to guess at the most critical moment—when they actually touch the world.

That’s why Robotiq is introducing tactile sensor fingertips for the 2F-85 Adaptive Gripper, bringing high-frequency tactile sensing to a proven manipulation platform already used at scale.

Why vision alone isn't enough

Vision is powerful before contact. After contact, it quickly loses relevance.

Objects deform. Fingers occlude the camera. Micro-slips happen faster than vision can detect. For Physical AI systems trying to generalize across objects and environments, this creates unstable learning and inconsistent outcomes.

Touch changes the equation.

With tactile feedback, robots can:

- Understand how force is distributed across the grasp

- Detect slip as it begins, not after failure

- Adapt grip strategy in real time

- Generate richer, more reliable datasets for learning
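To make "adapt grip strategy in real time" concrete, here is a minimal sketch of a slip-reactive grip-force rule. It assumes an upstream detector that reports one boolean slip flag per control cycle and a gripper that accepts a force setpoint in newtons. The function name `adapt_grip_force`, the step sizes and the force limits are hypothetical illustrations, not Robotiq parameters or API.

```python
from typing import Iterable, List


def adapt_grip_force(
    slip_flags: Iterable[bool],
    base_force: float = 20.0,  # hypothetical nominal grip force, N
    max_force: float = 60.0,   # hypothetical safety ceiling, N
    step_up: float = 5.0,      # increase applied on each cycle that reports slip
    decay: float = 0.5,        # relaxation toward base_force on slip-free cycles
) -> List[float]:
    """Return the force setpoint for each control cycle.

    Squeeze harder as soon as slip is reported, then relax slowly back
    toward the nominal force so fragile objects are not crushed.
    """
    force = base_force
    setpoints = []
    for slipping in slip_flags:
        if slipping:
            force = min(force + step_up, max_force)
        else:
            force = max(force - decay, base_force)
        setpoints.append(force)
    return setpoints


print(adapt_grip_force([False, True, True, False, False]))
# → [20.0, 25.0, 30.0, 29.5, 29.0]
```

The asymmetry (fast increase, slow decay) is one common design choice: reacting late to slip risks a drop, while relaxing too quickly can re-trigger slip.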

This isn’t about adding another sensor. It’s about giving robots access to the same class of information humans rely on to manipulate the physical world.

Adaptive gripping meets tactile sensing

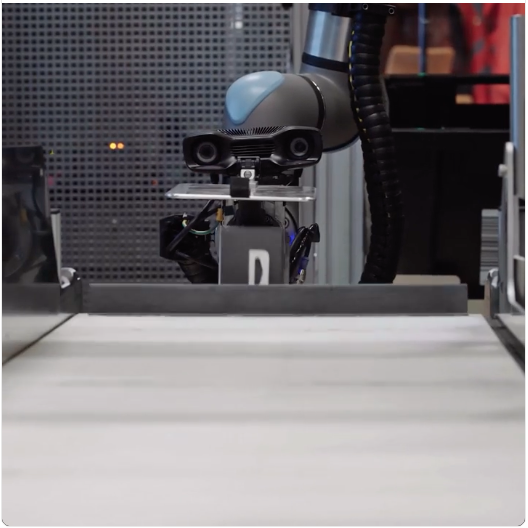

Robotiq’s 2F-85 Adaptive Gripper was designed to reduce dependence on perfect perception. Its patented mechanical architecture enables both pinch and encompassing grasps, allowing the gripper to conform to object geometry rather than forcing rigid alignment.

That adaptability already makes it well suited for general-purpose manipulation.

The new tactile sensor fingertips extend that capability by adding a dense sensing layer directly at the point of contact, including:

- A 4×7 static taxel grid to measure force distribution

- High-frequency dynamic feedback at 1000 Hz for vibration and slip detection

- An integrated IMU for proprioceptive sensing and contact awareness

Together, these signals allow robots to reason about contact geometry and interaction dynamics—capabilities that are critical for Physical AI systems learning from real-world experience.
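As an illustration of how the static and dynamic signals might be consumed, the sketch below computes total load and a force-weighted center of pressure from one 4×7 taxel frame, and flags possible slip whenever the short-window RMS of the 1000 Hz dynamic channel exceeds a multiple of a quiet-baseline RMS. The frame layout (4 rows of 7 bias-corrected readings), the 10 ms window, the threshold factor and all function names are assumptions for illustration; they are not taken from Robotiq's documentation or driver.

```python
import math
from typing import List, Optional, Sequence, Tuple

ROWS, COLS = 4, 7       # 4×7 static taxel grid
DYNAMIC_RATE_HZ = 1000  # dynamic channel sampling rate


def center_of_pressure(
    frame: Sequence[Sequence[float]],
) -> Tuple[float, Optional[Tuple[float, float]]]:
    """Total load and force-weighted centroid (row, col) of one taxel frame.

    Assumes `frame` holds ROWS lists of COLS non-negative, bias-corrected
    readings (hypothetical layout). Returns (total, None) with no contact.
    """
    total = sum(sum(row) for row in frame)
    if total <= 0:
        return total, None
    r = sum(i * v for i, row in enumerate(frame) for v in row) / total
    c = sum(j * v for row in frame for j, v in enumerate(row)) / total
    return total, (r, c)


def rms(samples: Sequence[float]) -> float:
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_slip(dynamic: Sequence[float], baseline_rms: float,
                window_ms: int = 10, factor: float = 3.0) -> List[bool]:
    """Flag each non-overlapping window whose RMS exceeds factor × baseline.

    window_ms and factor are illustrative tuning values, not vendor defaults.
    """
    n = DYNAMIC_RATE_HZ * window_ms // 1000  # samples per window (10 at 1 kHz)
    return [rms(dynamic[k:k + n]) > factor * baseline_rms
            for k in range(0, len(dynamic) - n + 1, n)]


# Usage: a load shared by two adjacent taxels, then a quiet window followed by a burst.
frame = [[0.0] * COLS for _ in range(ROWS)]
frame[1][2] = 2.0
frame[2][2] = 2.0
print(center_of_pressure(frame))  # → (4.0, (1.5, 2.0))

signal = [0.01, -0.01] * 5 + [0.2, -0.2] * 5
print(detect_slip(signal, baseline_rms=0.01))  # → [False, True]
```

Tracking how the center of pressure drifts between frames is another common slip cue; combining it with the vibration flag is left to the application.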

Built for fleets, not fragile demos

Many tactile solutions today are custom-built, fragile, and difficult to maintain. They work in controlled demos, but break down when scaled across dozens or hundreds of robots.

Robotiq takes a different approach.

The tactile-enabled 2F grippers are designed for repeatable, long-term deployment, building on hardware that is already operating globally in demanding industrial and research environments. Thousands of Robotiq grippers run daily with high uptime, predictable performance, and low total cost of ownership.

The tactile fingertips integrate directly with existing 2F-85 grippers using native RS-485 communication and a USB conversion board. They preserve the gripper’s pinch and encompassing grip mechanics with minimal impact on stroke and reach, and feature robust cabling designed for real-world operation.

The result is a manipulation platform that can move from lab pilots to large fleets without a complete hardware redesign.

Physical AI-ready from training to deployment

Physical AI workflows demand consistency.

For reinforcement learning, imitation learning, and vision-language-action models, noisy or inconsistent contact data can slow progress and destabilize training. Hardware variability becomes a hidden tax on every experiment.

Robotiq addresses this by standardizing both manipulation hardware and tactile sensing across fleets. The tactile sensor fingertips are designed to produce stable, repeatable signals, and Robotiq provides guidance on tactile data handling—including bias management, normalization, and outlier detection—to help teams generate high-quality datasets.
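Robotiq's own data-handling guidance is not reproduced here. As a generic sketch of the three steps named above, the code below subtracts a per-taxel bias estimated from no-contact frames, z-score normalizes each taxel across a dataset, and flags outlier frames using a median-absolute-deviation (modified z-score) test on total load. The flattened frame layout, the function names and the 3.5 threshold are assumptions chosen for illustration.

```python
import statistics
from typing import List, Sequence

Frame = List[float]  # one flattened taxel frame (e.g. 4×7 = 28 values); assumed layout


def estimate_bias(no_contact: Sequence[Frame]) -> Frame:
    """Per-taxel offset, estimated as the mean reading while nothing is touched."""
    return [statistics.fmean(col) for col in zip(*no_contact)]


def remove_bias(frames: Sequence[Frame], bias: Frame) -> List[Frame]:
    return [[v - b for v, b in zip(f, bias)] for f in frames]


def zscore(frames: Sequence[Frame]) -> List[Frame]:
    """Normalize each taxel to zero mean and unit variance across the dataset.

    In practice the statistics would come from the training split only.
    """
    cols = list(zip(*frames))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) or 1.0 for c in cols]  # guard constant taxels
    return [[(v - m) / s for v, m, s in zip(f, means, stds)] for f in frames]


def outlier_frames(frames: Sequence[Frame], threshold: float = 3.5) -> List[int]:
    """Indices of frames whose total load has a modified z-score above threshold."""
    totals = [sum(f) for f in frames]
    med = statistics.median(totals)
    mad = statistics.median([abs(t - med) for t in totals])
    if mad == 0:
        # More than half the frames share the median total; flag anything that differs.
        return [i for i, t in enumerate(totals) if t != med]
    return [i for i, t in enumerate(totals) if 0.6745 * abs(t - med) / mad > threshold]


print(outlier_frames([[10.0], [11.0], [9.0], [10.0], [12.0], [40.0]]))  # → [5]
```

The median-based test is used instead of a mean/standard-deviation test because a single large spike would otherwise inflate the spread and hide itself.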

By reducing integration friction and hardware variability, teams can focus on learning algorithms instead of constantly compensating for hardware edge cases.

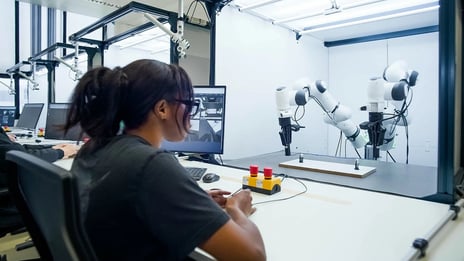

Proven by leading AI and robotics teams

With more than 23,000 grippers deployed worldwide, Robotiq’s manipulation technology is already trusted by leading manufacturers and AI labs. The tactile sensor fingertips build on that foundation, extending a field-proven platform into the next phase of Physical AI development.

As Aleksei Filippov, Head of Business Development at Yango Tech Robotics, puts it:

“To build physical AI that truly works, you need hardware that can sense, respond, and learn from every interaction. With Robotiq’s precision force control and reliable feedback, we capture rich sensory data from every grasp.”

Compared to DIY tactile hands that take months to develop and maintain, Robotiq offers a ready-to-deploy solution. And compared to anthropomorphic hands that add cost and complexity, the tactile-enabled 2F gripper can handle most real-world manipulation tasks with far lower risk.

Enabling the next phase of Physical AI

Physical AI doesn’t scale on clever algorithms alone. It scales on reliable interaction with the real world.

By combining adaptive gripping, high-frequency tactile sensing, and industrial-grade reliability, Robotiq gives robots the sense of touch they need to learn faster, operate more robustly, and move beyond isolated demos.

From AI training labs to humanoid platforms preparing for real deployment, tactile-enabled manipulation is no longer optional. It’s infrastructure.

And that’s exactly how Robotiq is building it.

