15 Mistakes People Make With Cobot Safety

Posted on Nov 06, 2018 in Collaborative Robots

Even collaborative robots can have safety problems, but only if you make a mistake. Here are 15 common mistakes that people make with cobot safety.

Safety around robots: you need to get it right. When safety procedures aren't followed, people can die.

Thankfully, collaborative robots have reduced a lot of the hazards involved with robots working alongside humans. However, they are not inherently safe in all situations. When you make mistakes, even cobots can be dangerous.

Here are 15 common mistakes that people make with cobot safety. Avoid these and you'll be fine.

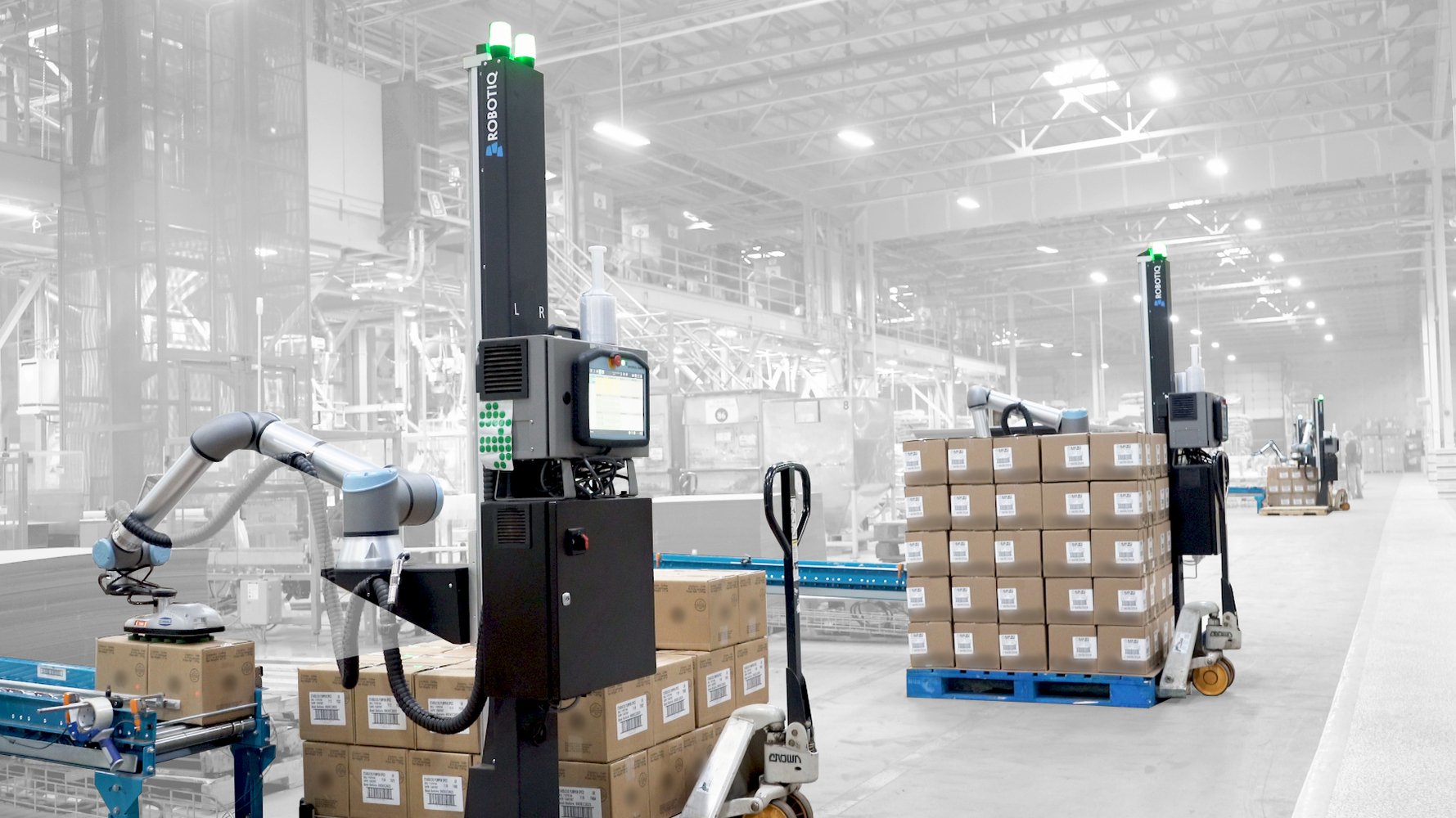

An operator using Robotiq's path recording at Saint-Gobain Factory in Northern France

1. Assuming that cobots are inherently safe

Yes, cobots are a safe alternative to traditional industrial robots. They are designed to operate around humans with no need for additional precautions. However, this doesn't mean that they are inherently safe. You need to assess them on a case-by-case basis.

Some people mistakenly assume that cobots are safe in all situations. As a result, they can end up performing dangerous actions with the robot without even realizing it.

2. Not doing a risk assessment

The key to being safe with cobots is to do a good risk assessment. You need to properly assess all aspects of the task and judge its overall safety.

We have an eBook devoted to this: How to Perform a Risk Assessment for Collaborative Robots.

Unfortunately, some people think that they can bypass the risk assessment when it comes to cobots. This is not a good idea.

3. Over-simplifying the risk assessment

One of the most common pitfalls we see with cobots is to over-simplify the risk assessment. Some people roughly outline the task but don't go into enough detail to identify the potential safety issues.

This is not just a problem with cobots. Treating a risk assessment as nothing more than "filling out a form" causes problems in any situation.

4. Over-complicating the risk assessment

The other common pitfall that we see is the exact opposite: over-complicating the risk assessment. Some people try to specify every tiny detail of the task and end up not being able to "see the wood for the trees."

Include as much detail as necessary, but no more.

5. Only considering risk from one activity

Robot applications include many different steps. All of these can affect the safety of the overall task. It can be easy to forget about the potential risks caused when, for example, the robot is moving between tasks. You need to consider the risks in every activity the robot will perform and every movement it will make.

6. Only considering expected operation

Safety problems often occur when something unexpected happens. For example, a human worker drops a tool into the safeguarded space of the robot and reaches inside to pick it up. Nobody expected this to happen, but suddenly the person is at risk.

Consider both expected and unexpected events when doing your risk assessment.

7. Failing to account for end effectors

The safety limits of collaborative robots only apply to the manipulator itself. However, the end effector you choose can hugely impact the safety of the robot. If you don't account for it, there could be serious safety implications.

An extreme example would be a welding end effector. Obviously, you would not want anyone to be near the robot when the welder was active.

8. Failing to account for objects

It's easy to forget that any objects held by the robot will also affect the safety. Jeff Burnstein of A3 talked about this in his keynote at our Robotiq User Conference this year. He used the example of a collaborative robot holding a knife. Even though the robot itself is safe, the knife certainly is not.

Safety issues can also arise when the robot holds long objects, which can reach outside its workspace, or heavy objects, which could fall and cause damage.

9. Not involving the team

Safety is only possible when everyone who will be using the robot is involved. If a risk assessment is created by one person in a far-away office, it won't be much use to anyone. The members of your team have a unique perspective on the task and should be included at all stages.

10. Using a risk assessment to justify a decision

A risk assessment should be an objective look at the activity from the perspective of safety. Unfortunately, people sometimes use risk assessments to justify decisions that have already been made.

For example, a person might have decided they don't want to pay for an expensive safety sensor, so they use the risk assessment to show that one is not needed. The problem with using risk assessments in this way is that it stops you from thinking clearly and makes it likely that you will miss some unsafe behavior.

11. Not considering ALARA

Sometimes, people find one or two potential risks, implement some risk controls, and then just leave it at that. Because they have found a few risks, they don't look for more.

ALARA stands for "As Low As Reasonably Achievable." It's a core concept of safety which means that you should reduce the risk of an activity as much as you can. This means that you should consider all of the risks of a robot application, not just one or two.

12. Using "reverse ALARA" arguments

This is related to the previous two mistakes. A "reverse ALARA" argument is one which uses risk assessment (or some other analysis, e.g. cost-benefit analysis) to show that safety measures should be reduced. Usually the justification is that the system still conforms to the legal safety limits.

The problem with this is that it directly goes against the principle of ALARA, hence the name.

13. Not linking hazards with controls

A good risk assessment identifies which parts of the robot task are most likely to cause hazards. However, this is not the end of the risk assessment. You also need to say how you will mitigate these hazards with controls.

Then — and this is a part people sometimes forget — you need to link each hazard to a control. If you don't do this, it's very difficult to tell if all your hazards have been addressed.
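One way to make the linking step checkable is to record each hazard with the IDs of the controls that address it, then list any hazard left without a link. The following is a minimal sketch of that idea. The `Hazard` class, the `find_unlinked_hazards` helper, and all of the IDs and descriptions are hypothetical and only illustrate the bookkeeping; they are not taken from a real assessment or from any standard.

```python
from dataclasses import dataclass, field


@dataclass
class Hazard:
    """One hazard identified in the risk assessment (illustrative fields)."""
    hazard_id: str
    description: str
    control_ids: list[str] = field(default_factory=list)


def find_unlinked_hazards(hazards: list[Hazard], controls: dict[str, str]) -> list[str]:
    """Return the IDs of hazards that have no link to a known control."""
    unlinked = []
    for hazard in hazards:
        # A link only counts if it points at a control that actually exists.
        valid_links = [cid for cid in hazard.control_ids if cid in controls]
        if not valid_links:
            unlinked.append(hazard.hazard_id)
    return unlinked


# Hypothetical example data, not taken from a real assessment.
controls = {
    "C1": "Limit speed while the robot moves between stations",
    "C2": "Sheath the blade whenever the robot is not cutting",
}
hazards = [
    Hazard("H1", "Collision while moving between tasks", ["C1"]),
    Hazard("H2", "Robot holding a knife", ["C2"]),
    Hazard("H3", "Worker reaches in to pick up a dropped tool"),
]

print(find_unlinked_hazards(hazards, controls))  # H3 has no control yet
```

In this sketch, any hazard ID in the returned list still needs a control before the assessment can be considered complete.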

14. Not keeping the risk assessment up to date

Risk assessments are only useful if they apply to the real robotic system as it is now. If your risk assessment was written two years ago, the robot has probably changed since then and the assessment is no longer up to date.

Collaborative robots change all the time. It's easy to reprogram them, add extra end effectors, and move them to a completely new task. As a result, your risk assessment should be regularly updated.
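A simple way to spot an outdated assessment is to compare the date of the last review with the dates of any changes to the robot cell. The sketch below assumes you keep a log of change dates (reprogramming, new end effectors, new tasks). The `assessment_is_stale` helper and the dates are hypothetical and for illustration only.

```python
from datetime import date


def assessment_is_stale(last_reviewed: date, change_dates: list[date]) -> bool:
    """True if any change to the robot cell happened after the last review."""
    return any(changed > last_reviewed for changed in change_dates)


# Hypothetical dates for illustration only.
last_reviewed = date(2018, 3, 1)
change_dates = [
    date(2018, 1, 15),  # robot reprogrammed (before the review)
    date(2018, 9, 20),  # new end effector fitted (after the review)
]

if assessment_is_stale(last_reviewed, change_dates):
    print("Risk assessment needs updating")
```

A date check like this only flags that a review is due. It does not replace the review itself.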

15. Telling nobody about the proposed measures

Safety measures are useless if you don't tell anyone about them. Too often, risk assessments are compiled, printed out, put into a drawer, and forgotten.

Make sure that your risk assessment is available to everyone and that everyone is aware of the safety measures. Read our eBook How to Perform a Risk Assessment for Collaborative Robots for more information.

What mistakes have you seen people making with robot safety? Tell us in the comments below or join the discussion on LinkedIn, Twitter, Facebook or the DoF professional robotics community.
