Top 10 Challenges for Robot Vision

Posted on Nov 20, 2017 in Vision Systems

Robot vision solutions are getting easier and easier to use. Even so, there are several things which make it tricky. Here are our top ten challenges for implementing robot vision.

We know that robot vision can improve your automation setup. Integrated robotic solutions give you the benefits of robotic vision quickly and easily, without needing programming skills. However, even with improved technology, vision is one of the more "tricky" aspects of robotics to get right.

Several factors affect robot vision in the environment, task setup and workplace. You can only correct for these factors if you know what they are, so here are 10 of our "favorite" robot vision challenges.

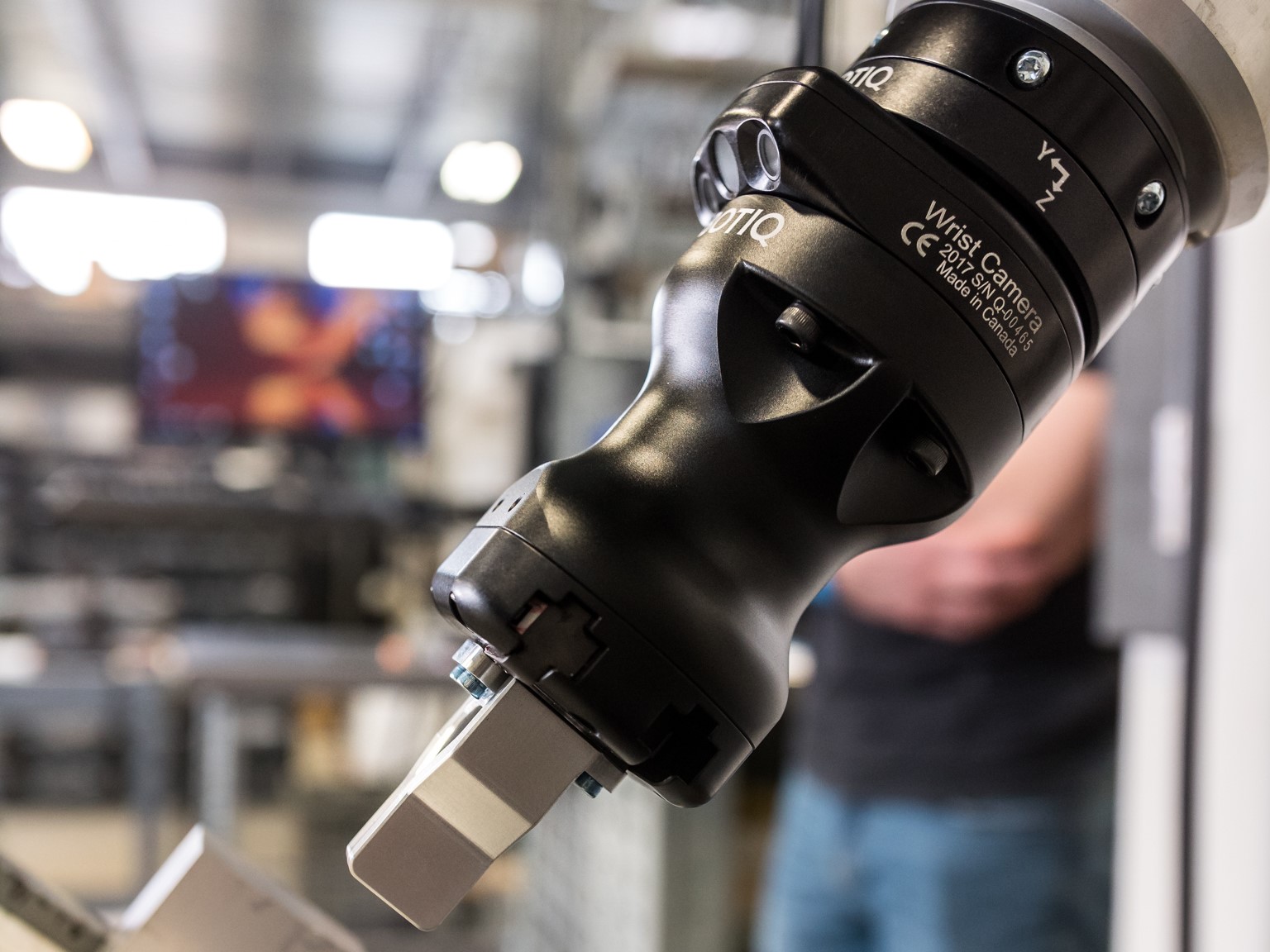

The most common function of a robot vision system is to detect the position and orientation of a known object

1. Lighting

If you have ever tried to take a digital photo in low light, you will know that lighting is essential. Bad lighting can ruin everything. Imaging sensors are not as adaptable or as sensitive as the human eye. With the wrong type of lighting, a vision sensor will be unable to reliably detect objects.

There are various ways of overcoming the lighting challenge. One way is to incorporate active lighting into the vision sensor itself. This is the approach we use in the Robotiq Camera. Other solutions include using infra-red lighting, fixed lighting in the environment or technologies that use other forms of light, such as lasers.

2. Deformation or Articulation

A ball is a simple object to detect with a computer vision setup. You could just detect its circular outline, perhaps using a template matching algorithm. However, if the ball was squashed it would change shape and the same method would no longer work. This is deformation. It can cause considerable problems for some robotic vision techniques.

Articulation is similar and refers to deformations which are caused by movable joints. For example, the shape of your arm changes when you bend it at the elbow. The individual links (bones) stay the same shape but the outline is deformed. As many vision algorithms use the outline of a shape, articulation makes object recognition more difficult.
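To make the ball example concrete, here is a minimal sketch in Python. It is not a real template matching library: it simply compares a stored binary "template" of a round ball with a new image at a fixed position, using an overlap score as a simplified stand-in for template matching. The function names, image size and ellipse dimensions are all assumptions chosen for illustration.

```python
def make_ellipse(size, rx, ry):
    """Return a size x size binary image (list of rows) with a filled, centred ellipse."""
    c = (size - 1) / 2
    return [[1 if ((x - c) / rx) ** 2 + ((y - c) / ry) ** 2 <= 1 else 0
             for x in range(size)]
            for y in range(size)]


def match_score(image, template):
    """Overlap (intersection over union) of two same-sized binary images."""
    inter = union = 0
    for image_row, template_row in zip(image, template):
        for a, b in zip(image_row, template_row):
            inter += a & b
            union += a | b
    return inter / union if union else 0.0


# Hypothetical data: the template was "trained" on a round ball.
template = make_ellipse(21, 8, 8)
round_ball = make_ellipse(21, 8, 8)
squashed_ball = make_ellipse(21, 10, 4)  # same ball, flattened

score_round = match_score(round_ball, template)
score_squashed = match_score(squashed_ball, template)
print(score_round, score_squashed)
```

The round ball overlaps the template perfectly, while the squashed ball scores much lower even though it is the same object. A detector with a fixed acceptance threshold would likely reject it.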

3. Position and Orientation

The most common function of a robot vision system is to detect the position and orientation of a known object. As a result, most integrated vision solutions have already overcome the challenges surrounding both.

Detecting an object's position is usually straightforward, as long as the entire object can be viewed within the camera image (see "Occlusion" to find out what happens if parts of the object are missing). Many systems are also robust to changes in the orientation of the object. However, not all orientations are equal. While it's simple enough to detect an object which is rotated about one axis, it is more complex to detect an object which has undergone rotations about several axes in 3D.
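For the simple single-axis case, position and orientation can be estimated from the image moments of a binary mask of the object. The sketch below shows one common textbook approach, not necessarily what any particular integrated vision product does. `pose_from_mask` is a hypothetical helper, and the mask is assumed to be already separated from the background.

```python
import math


def pose_from_mask(mask):
    """Return (cx, cy, angle_deg) for the foreground pixels of a binary mask.

    cx, cy is the centroid in pixel coordinates (x to the right, y downwards).
    angle_deg is the direction of the main axis, from second-order central moments.
    """
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    mu20 = sum((x - cx) ** 2 for x, _ in points) / n
    mu02 = sum((y - cy) ** 2 for _, y in points) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in points) / n
    angle = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, math.degrees(angle)


# Hypothetical test image: a thin bar lying along the image diagonal.
diagonal_bar = [
    [1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1],
]
cx, cy, angle_deg = pose_from_mask(diagonal_bar)
print(cx, cy, angle_deg)
```

This only recovers a rotation within the image plane, and it cannot tell an object from the same object turned by 180 degrees. Rotations about the other axes change the outline itself, which is why full 3D orientation is the harder problem.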

4. Background

The background of an image has a huge effect on how easy the object is to detect. Imagine an extreme example, where the object is placed on a sheet of paper on which images of that same object are printed. In this situation, it might be impossible for the robot vision setup to determine which is the real object.

The perfect background will be blank and provide a good contrast with the detected objects. Its exact properties will be dependent on which vision detection algorithms are being used. If an edge-detector is used then backgrounds should not contain sharp lines. The color and brightness of the background should also be different from the color and brightness of the object.
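To see why sharp lines in the background matter for an edge detector, consider this minimal sketch. It uses a deliberately crude "edge detector" (a brightness step between horizontal neighbours) on two tiny hypothetical scenes. The function names, brightness values and threshold are assumptions for illustration only.

```python
def count_edge_pixels(image, threshold=50):
    """Count neighbouring pixel pairs in each row whose brightness differs by more than threshold."""
    return sum(1
               for row in image
               for left, right in zip(row, row[1:])
               if abs(left - right) > threshold)


def make_scene(background_row, obj_value=200, obj_cols=(3, 6), height=4):
    """Build a small grayscale scene: a bright object drawn over a repeated background row."""
    rows = []
    for _ in range(height):
        row = list(background_row)
        for x in range(*obj_cols):
            row[x] = obj_value
        rows.append(row)
    return rows


blank_scene = make_scene([20] * 10)          # plain dark background
striped_scene = make_scene([20, 220] * 5)    # background with sharp stripes

blank_edges = count_edge_pixels(blank_scene)
striped_edges = count_edge_pixels(striped_scene)
print(blank_edges, striped_edges)
```

On the blank background, the only edges found belong to the object. On the striped background, most of the detected edges come from the background, so the object's outline is buried among false edges.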

5. Occlusion

Occlusion means that part of the object is covered up. With the previous four challenges, the whole object is present in the camera image. Occlusion is different because part of the object is missing. A vision system obviously cannot detect something which is not present in the image.

There are various things which could cause occlusion, including: other objects, parts of the robot or bad placement of the camera. Methods to overcome occlusion often involve matching the visible parts of the object to a known model of it and assuming that the hidden part of the object is present.
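The idea of matching the visible parts to a known model can be sketched as follows. This is a simplified illustration, assuming the model and the image are already aligned and that the object is described by a handful of feature points. `visible_fraction`, the point coordinates and the 50% acceptance threshold are all hypothetical.

```python
def visible_fraction(model_points, detected_points, tolerance=1.0):
    """Fraction of model points that have a detected point within `tolerance` pixels."""
    hits = 0
    for mx, my in model_points:
        if any((mx - dx) ** 2 + (my - dy) ** 2 <= tolerance ** 2
               for dx, dy in detected_points):
            hits += 1
    return hits / len(model_points)


# Known model: eight points around the outline of a square part.
model = [(0, 0), (10, 0), (20, 0), (20, 10), (20, 20), (10, 20), (0, 20), (0, 10)]

# Detected points: three model points are hidden, the rest are slightly noisy.
detected = [(0.4, 0.3), (10.2, -0.5), (19.8, 0.1), (20.3, 9.6), (19.9, 20.4)]

fraction = visible_fraction(model, detected)
object_present = fraction >= 0.5  # assume the hidden part is there if enough is visible
print(fraction, object_present)
```

Here five of the eight model points are found, so the sketch accepts the object and assumes the hidden points are present. The right threshold depends on the application: set it too low and clutter may be mistaken for the object.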

6. Scale

In some situations, the human eye is easily tricked by differences in scale. Robot vision systems can also become confused by them. Imagine that you have two objects which are exactly the same except that one is bigger than the other. Imagine you are using a fixed, 2D vision setup in which the apparent size of the object is used to estimate its distance from the robot. If you trained the system to recognize the smaller object, it would mistakenly conclude that the two objects were identical and that the larger object was simply closer to the camera.

Another issue of scale, which is perhaps less obvious, is that of pixel count. If the robot camera is placed far away, the object will be represented by fewer pixels in the image. Image processing algorithms generally work better when there are more pixels representing the object, with a few exceptions.
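Both scale effects follow from the simple pinhole camera model, in which the width of an object in pixels is roughly the focal length (in pixels) times the real width, divided by the distance. The sketch below works through the arithmetic. The focal length, object sizes and distances are made-up numbers, and a real camera would also need calibration for lens distortion.

```python
def pixel_width(focal_px, real_width_mm, distance_mm):
    """Approximate width of an object in the image, in pixels (pinhole model)."""
    return focal_px * real_width_mm / distance_mm


def estimated_distance(focal_px, assumed_width_mm, measured_px):
    """Distance the system infers if it assumes the object has a known real width."""
    return focal_px * assumed_width_mm / measured_px


FOCAL_PX = 800  # hypothetical focal length in pixels

# The system was trained on a 50 mm part, but a 100 mm part sits 1000 mm away.
seen_px = pixel_width(FOCAL_PX, 100, 1000)
wrong_distance = estimated_distance(FOCAL_PX, 50, seen_px)

# The same 50 mm part viewed from near and from far.
near_px = pixel_width(FOCAL_PX, 50, 500)
far_px = pixel_width(FOCAL_PX, 50, 2000)

print(seen_px, wrong_distance, near_px, far_px)
```

With these numbers, the larger part at 1000 mm covers the same 80 pixels as the trained part would at 500 mm, so the system places it at half its true distance. Moving the camera from 500 mm to 2000 mm shrinks the trained part from 80 pixels wide to 20, leaving the algorithm far less detail to work with.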

7. Camera Placement

Incorrect camera placement can cause any of the previous issues, so it is important to get it right. Try to set up the camera in an area with good lighting, in a position which provides a clear view of the objects with no deformation, and as close to the objects as possible without causing occlusions. There should be no distracting backgrounds or other objects between the camera and the viewing surface.

8. Movement

Movement can sometimes cause problems for a computer vision setup, especially when blurring occurs in the image. This might happen, for example, with an object on a fast-moving conveyor. Digital imaging sensors capture the image over a short period of time; they do not capture the whole image instantaneously. If an object is moving too quickly during the capture, it will result in a blurry image. Our eyes may not notice the blur in the video, but the algorithm will. Robot vision works best when there is a clear, static image.
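You can estimate how much blur to expect with some simple arithmetic: the object moves by its speed multiplied by the exposure time, and that distance is then converted to pixels. The sketch below assumes the motion is parallel to the image plane. The conveyor speed, exposure time and image scale are hypothetical values.

```python
def motion_blur_px(speed_mm_s, exposure_s, px_per_mm):
    """Approximate length of the blur streak in pixels for motion across the image."""
    return speed_mm_s * exposure_s * px_per_mm


def max_exposure_s(speed_mm_s, px_per_mm, max_blur_px=1.0):
    """Longest exposure time that keeps the blur within max_blur_px pixels."""
    return max_blur_px / (speed_mm_s * px_per_mm)


# Hypothetical setup: conveyor at 500 mm/s, 10 ms exposure, 2 pixels per mm.
blur = motion_blur_px(speed_mm_s=500, exposure_s=0.010, px_per_mm=2)
limit = max_exposure_s(speed_mm_s=500, px_per_mm=2)

print(blur, limit)
```

In this example the object smears across about 10 pixels, and the exposure would need to drop to about 1 ms to keep the blur within a single pixel. Shorter exposures need more light, which links this challenge back to lighting.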

9. Expectations

The final two challenges relate more to your approach to vision setups than to the technical aspects of vision algorithms. One of the biggest challenges to robot vision is simply that the workforce has unrealistic expectations about what a vision system can provide. By ensuring that expectations match the capabilities of the technology, you will get the most from it. You can achieve this by ensuring that the workforce is educated about the vision system, which leads us to...

10. Knowledge

Knowledge is the key to making the most of any technology. One way to kick-start your knowledge of robot vision is to use some of the resources which we provide here on the Robotiq Blog. Another great place to start is our new eBook "Adding Extra Sensors: How to Do Even More With Collaborative Robots".

Which of these problems have you encountered when using robot vision? How did you overcome them? Can you think of any other challenges which we have missed? Tell us in the comments below or join the discussion on LinkedIn, Twitter or Facebook.