Is Verbal Programming Really Feasible?

Posted on Apr 13, 2016 in Robot Programming


How long will it be before we can tell robots "go and do this task for me"? Will programming ever be so easy, or will we always have to program robots point-by-point? In this article, we find out what's involved in verbal programming to see how close we are to using it, and whether we'd even want it.

Incorporating language into programming has long been a goal of robotics research. Researchers from Sheffield Robotics recently announced a new development in swarm robotics: automatic programming using a form of linguistics. This was not what we'd usually think of as "natural language", because the robots themselves were doing the talking; each robot in the swarm uses the "language" to program the others. Still, the story started me wondering:

Just how close are we to being able to say, "Robot, go and do this task for me"?

Verbal Programming: A Long-Term Goal

Back in 2012, Google researcher Ni Lao wrote a report which discussed how "programming by demonstration" has been a long-term goal of computing. As far back as 1966, people had the idea of programming complex tasks using simple language. Lao noted that, as of 2012, there were no popular commercial successes using programming by demonstration. He cited examples including automatic programming techniques using CAD, extraction of learning data from email and web information, and robot programming using speech recognition.

Since that report was written, programming by demonstration has finally started to find its feet in commercial robotics. Some of his other examples have also advanced since then. Thanks to the rise in collaborative robotics, physical programming by demonstration has already arrived. It's also making its way into non-industrial applications. The Moley robotic chef, which we discussed last year, is a great example of programming by demonstration in consumer robotics. It uses motion capture as an input method.

Lao considers verbal programming to be a subset of programming by demonstration. Since programming by demonstration is now used in industry, why has verbal programming still not arrived?

The Challenges of Verbal Programming: I Can't Hear You & I Don't Understand You

It can be tempting to say that the technology is just not good enough yet for voice recognition in robotics, but is that really the case?

My smartphone is an amazing piece of technology. I can talk to it and, as long as I choose my words carefully, it understands me. I can say: "How do I get the bus from home to my office?" It will reply: "From opposite the primary school, take the 36 bus North departing in 8 minutes." That's really impressive. I've been following the progress of voice recognition since I got my first home computer over a decade and a half ago. Now, for the first time, I feel like voice recognition actually works.

Surely if voice recognition algorithms can work in mobile phones, they could work for robots too?

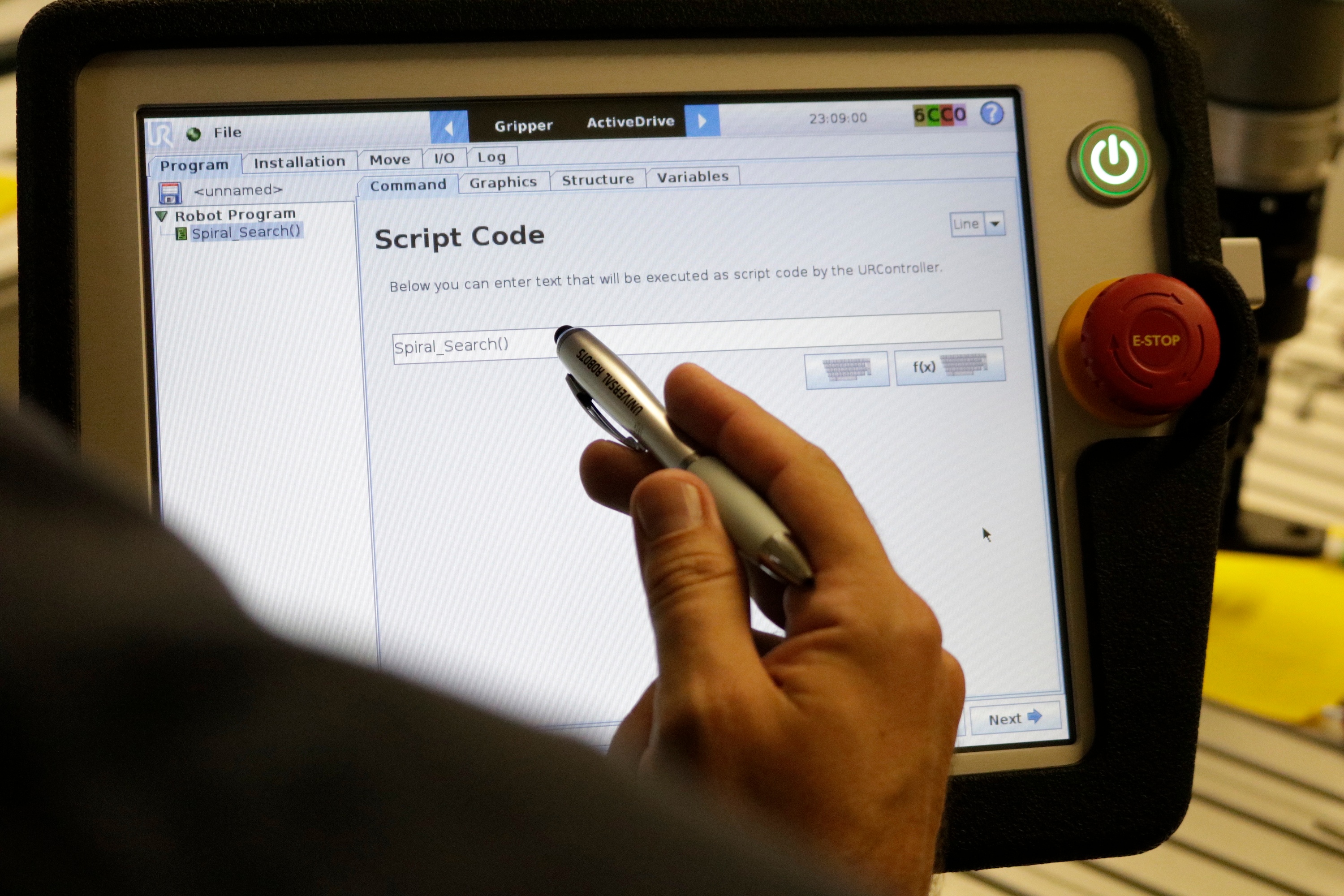

Researchers certainly think so, and have thought so for a long time. One paper in 2002 suggested that teach pendants were a thing of the past and that multi-modal programming, using voice and gesture, was the future. That was 14 years ago, and teach pendants are still very much part of the present. Multi-modal programming has stayed firmly within research laboratories.

The challenges for robotics might become clearer if we consider that voice recognition consists of two stages:

- Understanding the words.

- Extracting commands from them and knowing what to do with those commands.

My phone is very good at the first stage, but its second stage is fairly rudimentary. Most of the words it detects are simply used as a search term for Google. A small number of commands trigger extra actions, like opening an external app to play music. With industrial robotics, however, most of the challenges come in the second stage: knowing what to do with the commands. For one thing, what level of abstraction do we want?
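
The difference between the two stages can be sketched in a few lines of Python. This minimal example assumes stage one (speech-to-text) has already produced a transcript, and contrasts two hypothetical stage-two policies: a phone-style one that sends anything it doesn't recognize to a web search, and a robot-style one that only accepts a small, fixed vocabulary. The phrases and action names are illustrative assumptions, not the API of any real assistant or robot controller.

```python
from typing import Optional

# Hypothetical whitelist of spoken phrases a robot controller might accept.
# Stage one (speech-to-text) is assumed to have produced the transcript already.
ROBOT_INTENTS = {
    "stop": "STOP",
    "store": "STORE_POSITION",
    "resume": "RESUME",
}


def normalize(transcript: str) -> str:
    """Lower-case and drop punctuation so 'Stop!' and 'stop' match."""
    cleaned = "".join(ch for ch in transcript.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def phone_style(transcript: str) -> str:
    """Phone-like stage two: a few phrases open apps, everything else becomes a search."""
    text = normalize(transcript)
    if text.startswith("play "):
        return f"OPEN_MUSIC_APP:{text[len('play '):]}"
    return f"WEB_SEARCH:{text}"


def robot_style(transcript: str) -> Optional[str]:
    """Robot-like stage two: only whitelisted phrases map to actions; the rest is rejected."""
    return ROBOT_INTENTS.get(normalize(transcript))
```

The interesting design decision is what happens to unrecognized phrases. On a phone, a wrong guess costs one useless search; on a robot, returning `None` and asking the operator to repeat is the more cautious default.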

Where Do We Want Voice Programming?

You could feasibly program a robot task at any level of abstraction. However, at lower levels of abstraction, voice programming would be more of a nuisance than a help. For example, telling a manipulator to "move left 2cm then up 0.5cm" would be inefficient; it would be better to just move the robot by hand. At the other extreme, being able to say "deburr Part A then screw it into Part B" could be useful, but because the level of abstraction is far higher, we have less control over how the robot carries out the task.
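
To see why low-level voice commands are cumbersome, consider what the robot actually has to recover from "move left 2cm then up 0.5cm". The sketch below is a hypothetical parser, not any controller's interface; the axis convention (x to the right, y forward, z up) is our own assumption. It turns such a phrase into a list of Cartesian offsets in millimetres.

```python
import re
from typing import List, Tuple

# Assumed axis convention (hypothetical): x = right, y = forward, z = up.
DIRECTIONS = {
    "left": (-1.0, 0.0, 0.0),
    "right": (1.0, 0.0, 0.0),
    "forward": (0.0, 1.0, 0.0),
    "back": (0.0, -1.0, 0.0),
    "up": (0.0, 0.0, 1.0),
    "down": (0.0, 0.0, -1.0),
}
UNIT_TO_MM = {"mm": 1.0, "cm": 10.0}

# One step looks like "[move] <direction> <number><unit>", e.g. "move left 2cm".
STEP = re.compile(r"(?:move\s+)?(left|right|forward|back|up|down)\s*(\d+(?:\.\d+)?)\s*(mm|cm)")


def parse_moves(phrase: str) -> List[Tuple[float, ...]]:
    """Split the phrase on 'then' and convert each step to an (x, y, z) offset in mm."""
    offsets = []
    for step in phrase.lower().split("then"):
        match = STEP.fullmatch(step.strip())
        if match is None:
            raise ValueError(f"Unrecognized step: {step.strip()!r}")
        direction, amount, unit = match.groups()
        scale = float(amount) * UNIT_TO_MM[unit]
        offsets.append(tuple(scale * component for component in DIRECTIONS[direction]))
    return offsets
```

The whole spoken phrase boils down to two small offsets, which is exactly the information a technician conveys by simply guiding the arm.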

It seems imperative that we work out at what level of abstraction we would want to use verbal programming; that is, if we need to use it at all (and we might not). Technicians might prefer programming by physically moving the robot, even if it took more time than programming with verbal commands. We would also have to work out what type of commands to give the robot.

In Ni Lao's report, he split verbal programming into three parts:

- Command sentences ("Do this.")

- Programming sentences ("This is how to do this.")

- Feedback sentences ("You did that wrongly/correctly." or "Stop!")
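
These categories imply that a robot must first decide what kind of sentence it has heard before it can act on it. The Python sketch below is a deliberately naive, keyword-based classifier. The cue words are our own illustrative assumption, not taken from Lao's report, and a practical system would need far more robust language understanding.

```python
import re
from enum import Enum


class SentenceType(Enum):
    COMMAND = "command"          # "Do this."
    PROGRAMMING = "programming"  # "This is how to do this."
    FEEDBACK = "feedback"        # "You did that wrongly/correctly." or "Stop!"


# Hypothetical surface cues, chosen only to illustrate the three categories.
PROGRAMMING_PREFIXES = ("this is how", "here is how")
FEEDBACK_WORDS = {"stop", "wrong", "wrongly", "correct", "correctly"}


def classify(sentence: str) -> SentenceType:
    """Assign a sentence to one of the three categories using keyword cues."""
    text = sentence.lower().strip()
    # Check teaching phrases first, so "This is how to stop..." is not read as feedback.
    if text.startswith(PROGRAMMING_PREFIXES):
        return SentenceType.PROGRAMMING
    words = set(re.findall(r"[a-z']+", text))
    if words & FEEDBACK_WORDS:
        return SentenceType.FEEDBACK
    # Anything else is treated as an instruction to act now.
    return SentenceType.COMMAND
```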

It seems likely that, if industrial robots are to incorporate voice recognition at all, it would have to be in a limited way. Command sentences, at the highest level, could represent very complex sequences of actions. For example, you could invoke several high-level actions by saying "pick up Part A from here and put it down here". At the lowest level, voice commands could act as very simple programming sentences. For example, this could offer a "hands-free" way to save the position of an end effector by simply saying "store" (this position) after moving the robot.
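
The two extremes described above can share one small, fixed vocabulary. The sketch below assumes the technician guides the arm by hand and that the controller offers some way to read the current end-effector position; the `read_pose` hook, the phrases, and the action strings are all hypothetical. "Store" records a waypoint hands-free, while a single higher-level phrase, "pick and place", expands into a sequence of primitive actions using the two most recently stored waypoints as the "here" positions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Pose = Tuple[float, float, float]  # Hypothetical: end-effector x, y, z in millimetres.


@dataclass
class VoiceTeachSession:
    """Minimal sketch of limited voice commands layered on hand-guided teaching."""

    read_pose: Callable[[], Pose]  # Hypothetical hook returning the current end-effector pose.
    waypoints: List[Pose] = field(default_factory=list)

    def on_phrase(self, phrase: str) -> List[str]:
        text = phrase.lower().strip(" .!")
        if text == "store":
            # Low level: save wherever the technician has just moved the arm.
            self.waypoints.append(self.read_pose())
            return [f"STORED waypoint {len(self.waypoints)}"]
        if text == "pick and place":
            # High level: one phrase expands into several primitive actions,
            # using the last two stored waypoints as pick and place positions.
            if len(self.waypoints) < 2:
                return ["REJECTED: store a pick and a place position first"]
            pick, place = self.waypoints[-2], self.waypoints[-1]
            return [f"MOVE {pick}", "CLOSE_GRIPPER", f"MOVE {place}", "OPEN_GRIPPER"]
        # Anything outside the vocabulary is refused rather than guessed at.
        return [f"REJECTED: {phrase!r} is not in the vocabulary"]
```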

Would these kinds of applications use voice recognition technology to its full potential? No, not really. However, I find it hard to imagine robotic technicians being happy to program a robot with the same uncertainty and error rate you get when dictating a text message to your mobile. Voice commands might have to stay basic if they are to be both reliable and useful.

Can you imagine using verbal programming in your application? What do you think the benefits/disadvantages would be? What type of commands can you imagine assigning to verbal programming? Tell us in the comments below or join the discussion on LinkedIn, Twitter or Facebook.
