Choosing the Correct 2D Vision System for Your Application

Posted on Nov 16, 2016 in Vision Systems


There are two main categories of 2D sensors that you can buy: intelligent cameras (also called smart cameras), which have the sensor and the processor embedded in one casing, and standalone (independent) cameras, which contain only the sensor and rely on another device to do the processing.

Why would you buy the latter then? It really depends on what you need. Here are some criteria to base your choice upon.

Level of Machine Vision and Programming Expertise of the User

Smart cameras tend to be easier to program than standalone camera systems. They have built-in vision tools that can easily be set up for your application. Some of these systems use a simple interface: you connect to the camera through its IP address and configure it from there. These systems can directly output what you need, such as a pass/fail status or an object's location. Cameras that are not integrated, on the other hand, require additional software to do the image analysis. In exchange, they offer more flexibility, as you will see in the next section.

Image Processing

Non-integrated cameras require an external processor to analyze the acquired images; for example, you could plug the camera into a computer. A computer will usually offer more processing power than a smart camera, so it should process images faster. For simple vision tasks such as barcode reading, however, a smart camera probably has enough processing power. This is why you should first determine the processing speed your application requires, so that you can properly match a system to your needs.

The other difference is the flexibility of the image processing itself. The embedded software library in a smart camera provides basic image processing functions: some filters, pattern recognition, teaching a template image, and so on. For each of these functions, you can configure parameters such as the region of interest (the part of the image you want to process) or the threshold you find acceptable. If you need more sophisticated processing, such as designing your own filters, you will need a more flexible and advanced library. A non-integrated camera paired with a separate software library offers more options, since it gives you access to more image processing functions and parameters.
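To make the difference concrete, here is a minimal sketch in plain Python, assuming an 8-bit grayscale image stored as a list of rows. The helper names (`crop_roi`, `pass_fail`, `filter3x3`) are hypothetical and do not correspond to any camera's or library's API. `pass_fail` mimics the kind of configurable tool a smart camera offers (you only set a region of interest and a threshold), while `filter3x3` stands in for the user-designed filter you would typically need a separate, more flexible library for.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale pixels, 0-255, one list per row


def crop_roi(img: Image, x: int, y: int, w: int, h: int) -> Image:
    """Return the region of interest whose top-left corner is (x, y), size w x h."""
    return [row[x:x + w] for row in img[y:y + h]]


def pass_fail(img: Image, roi: Tuple[int, int, int, int],
              threshold: int, min_fraction: float) -> bool:
    """Smart-camera-style tool: pass if enough ROI pixels are brighter than threshold."""
    region = crop_roi(img, *roi)
    pixels = [p for row in region for p in row]
    bright = sum(1 for p in pixels if p > threshold)
    # An empty ROI is treated as a failure rather than dividing by zero.
    return bool(pixels) and bright / len(pixels) >= min_fraction


def filter3x3(img: Image, kernel: List[List[float]]) -> Image:
    """User-designed filter: slide a 3x3 kernel over the image (correlation,
    identical to convolution for symmetric kernels). Border pixels are copied."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            acc = 0.0
            for kr in range(3):
                for kc in range(3):
                    acc += kernel[kr][kc] * img[r + kr - 1][c + kc - 1]
            # Clamp back to the valid 8-bit range.
            out[r][c] = max(0, min(255, int(round(acc))))
    return out


# Usage: a dark 6x6 image with a bright 2x2 "part" in the middle.
img = [[10] * 6 for _ in range(6)]
for r in range(2, 4):
    for c in range(2, 4):
        img[r][c] = 200

print(pass_fail(img, (2, 2, 2, 2), threshold=128, min_fraction=0.9))  # True
print(pass_fail(img, (0, 0, 2, 2), threshold=128, min_fraction=0.9))  # False

box_blur = [[1 / 9] * 3 for _ in range(3)]
print(filter3x3(img, box_blur)[2][2])  # 94
```

With a smart camera you would normally only adjust the equivalent of `roi`, `threshold` and `min_fraction`; writing something like `filter3x3` with your own kernel is where a standalone camera and a full image processing library earn their keep.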

Price

Having high-resolution images and a full-blown processor sounds cool? Sure, but you’ll spend a lot more money on this kind of setup, and you’ll also need to synchronize the processor with the camera, so think twice before spending money on a setup that might be overkill. When comparing costs from one solution to another, take into account the integration time, the software setup time, and the programming time, not just the hardware price. Analyzing the complete cost of each system gives you a more accurate picture, and from there you can decide whether a “plug & play” smart camera or a more adjustable independent camera is best for you.
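The complete-cost comparison boils down to simple arithmetic: hardware price plus the labor hours for integration, software setup and programming, multiplied by an hourly rate. The sketch below assumes that model; the `VisionSetup` class, the `cheapest` helper and all the figures in the usage example are hypothetical and only illustrate how the comparison can flip once labor is counted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VisionSetup:
    """Hypothetical cost inputs for one vision solution (figures are illustrative)."""
    name: str
    hardware_cost: float      # camera, lens, processor or PC
    integration_hours: float  # mounting, wiring, camera/processor synchronization
    setup_hours: float        # software installation and configuration
    programming_hours: float  # developing and tuning the vision program

    def total_cost(self, hourly_rate: float) -> float:
        """Hardware price plus all labor hours at the given hourly rate."""
        labor_hours = self.integration_hours + self.setup_hours + self.programming_hours
        return self.hardware_cost + labor_hours * hourly_rate


def cheapest(setups: List[VisionSetup], hourly_rate: float) -> VisionSetup:
    """Return the setup with the lowest complete cost."""
    return min(setups, key=lambda s: s.total_cost(hourly_rate))


# Usage with made-up numbers: the standalone camera has cheaper hardware,
# but needs far more integration and programming time.
smart = VisionSetup("smart camera", 4000, integration_hours=4, setup_hours=2, programming_hours=6)
pc = VisionSetup("camera + PC", 3000, integration_hours=16, setup_hours=8, programming_hours=40)

print(smart.total_cost(80), pc.total_cost(80))  # 4960.0 8120.0
print(cheapest([smart, pc], 80).name)           # smart camera
print(cheapest([smart, pc], 0).name)            # camera + PC (hardware price only)
```

Comparing only hardware prices (an hourly rate of zero) favors the cheaper standalone camera; once labor is included, the conclusion can reverse, which is exactly why the complete cost matters.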

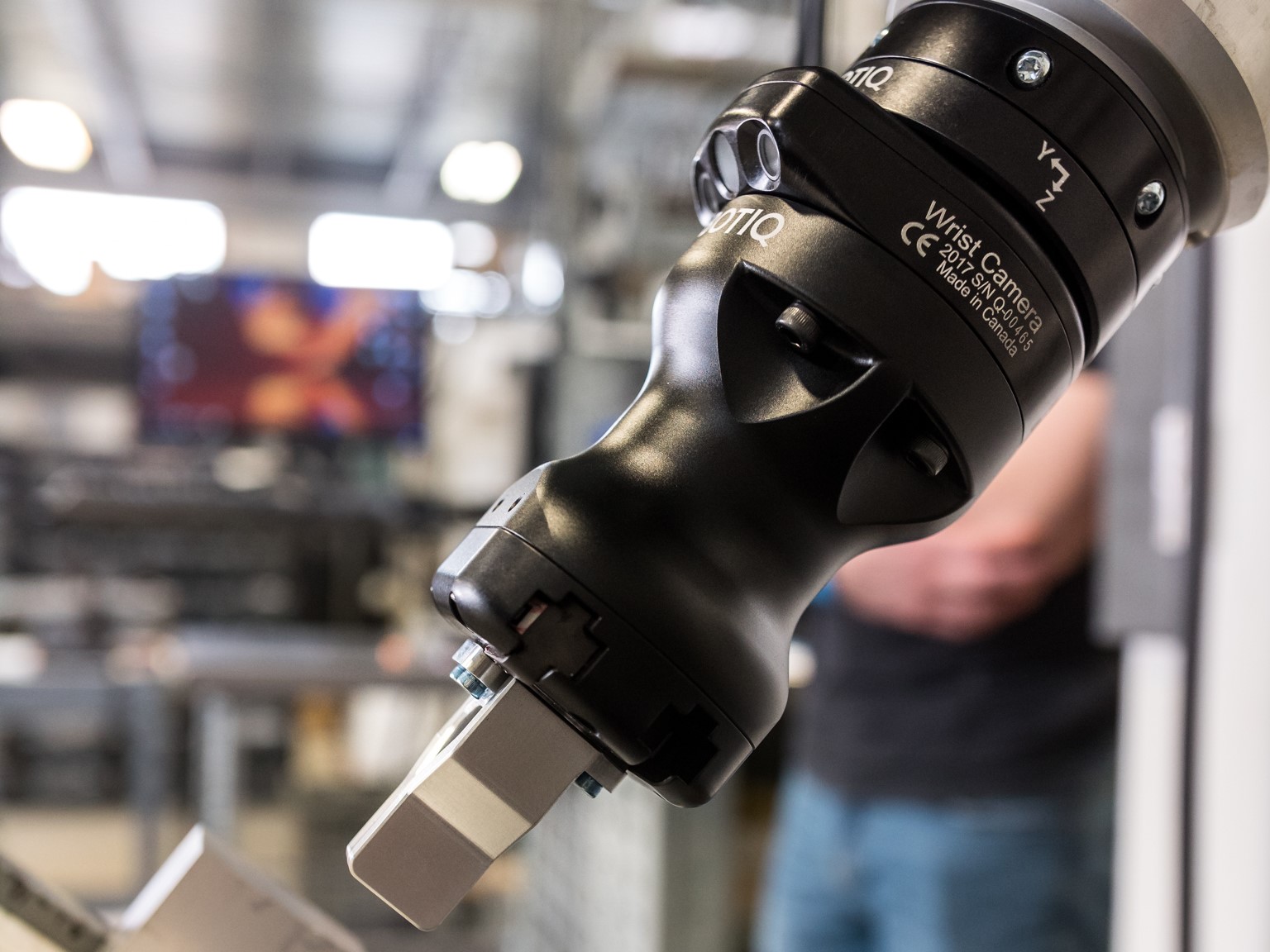


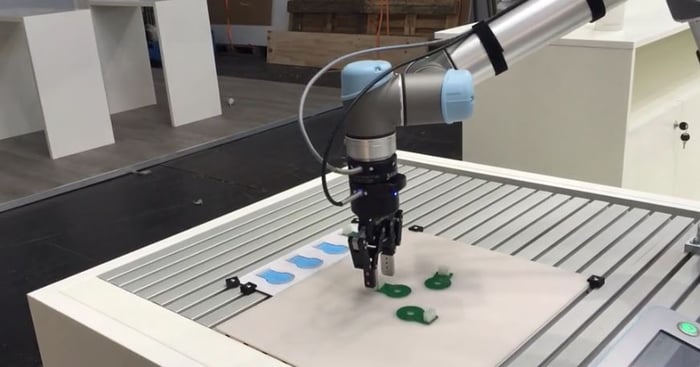

Now you might wonder: where does Robotiq’s Camera stand in relation to the descriptions above? It is a completely integrated camera, like a smart camera, and special attention has been given to its ease of use. Check its specifications for more information, and take a look at the camera in use in different applications.

Learn more about how to integrate vision systems with collaborative robots by downloading our brand-new eBook. It covers the basics of machine vision to help you figure out exactly what a simple vision application is and how it differs from a more complex one. So if you are just starting out with vision, or if you think adding vision to your system might solve one of your pet peeves, this eBook is a great place to start.
