What Is Robot Benchmarking?

Technical industries often have well-established benchmarks, but robotics does not. We introduce some promising new benchmarks for robotic manipulators and discuss why task-based benchmarks have been uncommon in robotics.

Most technical fields use benchmarking. We use it directly, to test our solutions against the solutions of others. We use it indirectly, when deciding which new technology to buy.

Benchmarks give us a simple, clear indication of whether a technology or solution is suitable for our needs. Or do they? Are benchmarks really clear and simple? There has long been a lack of benchmarks in many areas of robotics. Task-based benchmarks are even more uncommon. Several benchmarks have been proposed, but they are still few and far between.

Recently, a team of researchers at Yale announced their proposal for a suite of task-based benchmarks for robotic manipulation. The test suite consists of 77 objects and can fit in a suitcase. The idea is that the objects are incorporated into several benchmarking tasks, and a robot's performance is measured by which tasks it can successfully complete.

Task-based benchmarks for robots are few and far between. In this article, we discuss what they are and why they are necessary for the future of robotics.

Why Are Task-Based Benchmarks Uncommon?

The news caught my attention because I also developed a task-based robotic benchmark back when I did my PhD thesis in 2014. I am always supportive of this type of benchmark. I think they are necessary for us to be able to truly compare the performance of different robotic systems.

During my research, I discovered that fields like computer science have a very mature culture of performance benchmarking. Robotics, however, does not. So far, no task-based benchmarks have been accepted by the whole robotics community. They are uncommon in research and few of these benchmarks are ever used outside of the labs which develop them.

Why are there so few task-based benchmarks in robotics? In part, it's due to the lack of a benchmarking culture. It's also because robotic tasks are so complex.

Types of Robotic Manipulator Benchmark

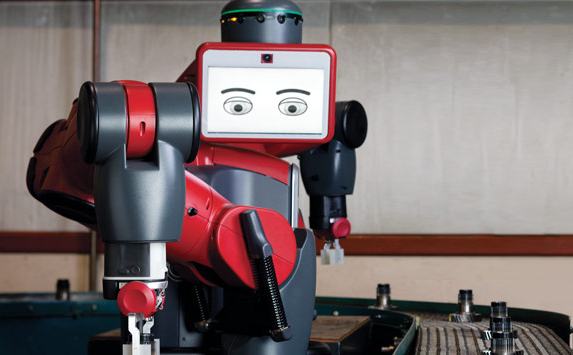

There are various ways to measure the performance of a robot, and some are more common than others. Different areas of robotics will have different benchmarking needs. It's important to clarify that I'm talking about robotic manipulators, including collaborative robots. Other robotic disciplines may have more established task-based benchmarks that I'm unaware of.

There are a few ways to measure the performance of a robotic manipulator, two of which are:

- Manipulator Performance Metrics: These are well integrated into robotics. They measure the objective performance of a robot manipulator using metrics like accuracy, repeatability, payload capacity, etc. Standards define the protocols for how these should be measured, such as the ISO Standard for Industrial Manipulators (ISO 9283). We introduce some of the most important metrics in our eBook How to Shop for a Robot.

- Task-based Benchmarks: These are the type of benchmark which are largely missing in robotics. They define tasks which demonstrate the robot's capability in specific skills. For example, assembling a particular product, pouring a glass of water, etc.
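To make the first type concrete, here is a minimal Python sketch of how positional accuracy and repeatability are commonly computed from repeated moves to one commanded point. It follows the usual reading of the ISO 9283 position definitions (accuracy as the distance from the commanded point to the mean attained point, repeatability as the mean spread plus three standard deviations). The function name and the sample data are my own illustrative assumptions, and the standard itself prescribes test conditions that are not modeled here.

```python
import math
from statistics import mean, stdev


def position_accuracy_and_repeatability(commanded, attained):
    """Return (accuracy, repeatability) for one commanded XYZ point.

    commanded: (x, y, z) target position.
    attained:  list of measured (x, y, z) positions, at least two.
    Units are whatever the inputs use (e.g. millimetres).
    """
    # Mean attained position (the "barycentre" of the measurements).
    barycentre = tuple(mean(p[i] for p in attained) for i in range(3))

    # Accuracy: how far the mean attained position is from the target.
    accuracy = math.dist(barycentre, commanded)

    # Repeatability: mean distance to the barycentre plus three
    # sample standard deviations of those distances.
    distances = [math.dist(p, barycentre) for p in attained]
    repeatability = mean(distances) + 3 * stdev(distances)
    return accuracy, repeatability


if __name__ == "__main__":
    # Hypothetical measurements in millimetres, not real robot data.
    target = (0.0, 0.0, 0.0)
    measured = [(1.5, 0.0, 0.0), (-0.5, 0.0, 0.0),
                (0.5, 1.0, 0.0), (0.5, -1.0, 0.0)]
    print(position_accuracy_and_repeatability(target, measured))
```

Numbers like these tell you how precisely the arm moves, but nothing about whether it can finish a given job.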

You can think of task-based benchmarks as a sort of "benchmark for the real world." They give you a more realistic picture of how the robot actually performs. Performance metrics like accuracy and repeatability are vital; however, they don't answer questions like: "Will this robot be able to assemble my product?"

The closest thing we have to a "standard task-based manipulator benchmark" is the peg-in-hole task. As the name suggests, it involves inserting a peg into a hole. However, although many research papers use it, it is not a true benchmark. In order to make it one, we would have to define a test procedure, as well as dimensions, tolerances and materials for the peg and hole. Currently, everyone has their own version of the peg-in-hole which means it's impossible to accurately compare results.
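As a rough illustration of what "defining" the peg-in-hole task would involve, the sketch below writes the specification down as data. Every field name and value is a hypothetical placeholder of mine, not part of any published benchmark. The point is only that two results can be compared when they were produced against an identical specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PegInHoleSpec:
    """Hypothetical task definition: dimensions, tolerances, materials, procedure."""
    peg_diameter_mm: float
    hole_diameter_mm: float
    diameter_tolerance_mm: float
    insertion_depth_mm: float
    peg_material: str
    hole_material: str
    trials_per_run: int

    @property
    def nominal_clearance_mm(self) -> float:
        # Gap between hole and peg at nominal dimensions.
        return self.hole_diameter_mm - self.peg_diameter_mm


@dataclass(frozen=True)
class PegInHoleResult:
    lab: str
    spec: PegInHoleSpec
    successful_insertions: int

    @property
    def success_rate(self) -> float:
        return self.successful_insertions / self.spec.trials_per_run


def comparable(a: PegInHoleResult, b: PegInHoleResult) -> bool:
    """Results are only comparable if every field of the spec matches."""
    return a.spec == b.spec


if __name__ == "__main__":
    # Placeholder values for illustration only.
    tight = PegInHoleSpec(10.0, 10.1, 0.01, 20.0, "steel", "aluminium", 50)
    loose = PegInHoleSpec(10.0, 10.5, 0.01, 20.0, "steel", "aluminium", 50)
    lab_a = PegInHoleResult("Lab A", tight, 45)
    lab_b = PegInHoleResult("Lab B", loose, 50)
    print(lab_a.success_rate, lab_b.success_rate, comparable(lab_a, lab_b))
```

In this example Lab B's higher success rate means little, because its hole is larger: the two labs ran different tasks under the same name.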

Introducing the Yale-CMU-Berkeley Object and Model Set

There have been a few proposals for task-based manipulator benchmarks in the past. The most recent one from Yale does look promising. However, the Yale-CMU-Berkeley (YCB) Object and Model Set will have to be accepted by the wider robotics community. Traditionally, this has been a challenge because research groups who are interested in benchmarking often try to introduce their own benchmarks. With so few people using each one, the benchmarks never reach the "critical mass" required to become de facto standards.

The YCB Object and Model Set includes a set of objects and, most importantly, test protocols for various manipulation tasks. The project's website provides 3D models of all 77 objects and five benchmarking protocols. Objects are grouped into five categories: food items, kitchen items, tool items, shape items, and task items. The last category includes a version of the peg-in-hole task.

This benchmark suite is far more developed than most of the ones I have come across before. However, unless the researchers can convince other research labs to use the YCB, it will disappear like others before it. The team clearly recognize this because they have sent their test suite to over 100 robotics research labs around the world. I think this is a very clever move and I wish them the best of luck.

Task-Based Benchmarks for Collaborative Robots

Collaborative robots introduce even more challenges for task-based benchmarking. Not only do benchmarks need to test the robot's performance, they should also test its safety features and its ability to collaborate.

The National Institute of Standards and Technology (NIST) is currently developing a set of benchmarking protocols for collaborative robots. This will include:

- Test methods and metrics.

- Collaborative task decompositions.

- Protocols for robot-robot and human-robot collaboration.

- Tests of the robot's situational awareness.

The project began in 2013 and is due to be completed in 2018.

This is an impressive set of goals and it will be interesting to see how the team achieves each of them. It is certainly true that the market needs this type of benchmarking, though it might need it sooner than 2018 given the popularity of collaborative robots.

Get Started Benchmarking Your Robot

Until an accepted collaborative robot benchmark comes along, it is down to us to benchmark our own robots. Robotics research labs have developed their own internal benchmarks for years. Manufacturing companies can do the same, selecting tasks from their own processes as benchmarks for robot performance. In the next post, we will discuss how to do this.
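In the meantime, here is a minimal sketch of what an in-house benchmark log could look like: record each attempt at a task, then summarize the success rate and the mean cycle time of the successful attempts. The task names, fields, and numbers are hypothetical examples rather than an established protocol.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    task: str            # name of the process step used as a benchmark
    success: bool        # did the robot complete the task?
    cycle_time_s: float  # time taken for this attempt, in seconds


def summarize(trials):
    """Group trials by task and compute simple per-task statistics."""
    by_task = {}
    for trial in trials:
        by_task.setdefault(trial.task, []).append(trial)

    summary = {}
    for task, runs in by_task.items():
        successes = [r for r in runs if r.success]
        summary[task] = {
            "trials": len(runs),
            "success_rate": len(successes) / len(runs),
            # Cycle time is only meaningful for completed attempts.
            "mean_cycle_time_s": (
                mean(r.cycle_time_s for r in successes) if successes else None
            ),
        }
    return summary


if __name__ == "__main__":
    # Made-up log entries for illustration.
    log = [
        Trial("insert_pin", True, 4.0),
        Trial("insert_pin", True, 6.0),
        Trial("insert_pin", False, 9.0),
        Trial("insert_pin", True, 5.0),
        Trial("place_cap", False, 7.5),
        Trial("place_cap", False, 8.0),
    ]
    for task, stats in summarize(log).items():
        print(task, stats)
```

Running the same log format against two candidate robots, with the task setup held fixed, gives a like-for-like comparison on the tasks that matter to your process.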

How do you measure the performance of your robot? Have you used any de facto or other standards to test your robot? What type of benchmarks would you like to see in robotics? Tell us in the comments below or join the discussion on LinkedIn, Twitter, Facebook or the DoF professional robotics community.
