How to Benchmark a Robot Application in 10 Steps

You develop a new application for your robot, or modify an existing one. How do you know if you have improved the process? What if you've made it worse? Benchmarking gives us an objective way to measure the success of our robot applications, but if we don't follow the right process the results can be misleading. In this article, we explain how to benchmark properly to improve our robotic applications.

There are very few established, task-based benchmarks in collaborative robotics. As we discussed in the previous article, this is partly due to the lack of a benchmarking culture in robotic research. Whatever the reason, it means that it is our own responsibility to create benchmarks for our robotic applications, whether we are in industry or in research.

Task-based benchmarking involves testing the performance of a robotic system for a particular task. In an ideal world, we would perform this type of test every time we made a significant change to the robot setup or programming. We should also do it when we create a new robot application for an existing process.

For example, let's say I am already using a collaborative robot to pick and place circuit boards. The operation is too slow for me, so I decide to change the setup to speed it up. I go onto the DoF forum and ask the community for suggestions. They give me several good options, all of which work for my application. But now I'm stuck: how do I decide between the solutions? I need to turn the task into a "task-based benchmark" so that I can compare the solutions objectively.

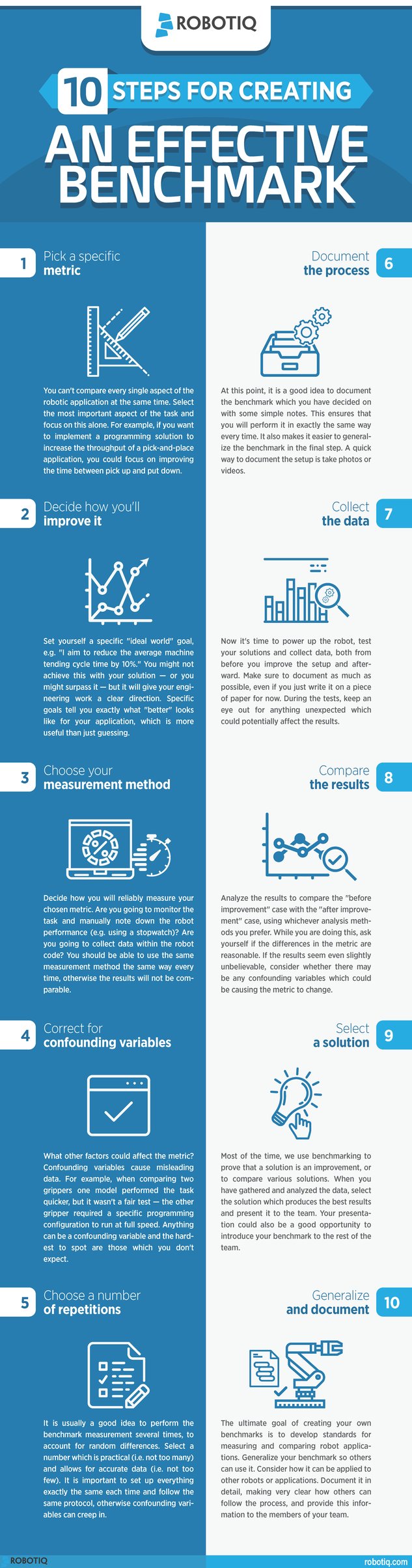

Although the idea of comparing solutions might seem like common sense, many of us don't follow a clear benchmarking process and end up with misleading data. Here is one possible process which you can follow.

10 Steps for Creating an Effective Benchmark

This process describes how to use benchmarks to test improvements to your existing robotic setup; for example, when you change the robot programming and want to check whether the robot performs better, the same, or worse than it did before. If instead you want to test a brand new robot application, use the same process but compare the "with robot" and "without robot" cases.

During my PhD, I studied some task-based benchmarks from the field of occupational therapy. These are tests which therapists use to determine, for example, a person's loss of dexterity due to Parkinson's Disease. In the process, I learned that task-based benchmarks are often more complex than they first appear. Even apparently simple tasks — like stacking blocks on top of each other — must follow a strictly controlled process, otherwise the results can become meaningless due to "confounding variables."

When you use this process to develop your own benchmarks, keep every aspect of the robotic setup exactly the same between runs, apart from the one change you are testing. That way, any difference in the results can be attributed to that change rather than to a confounding variable.
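As a rough illustration of the before-and-after comparison described above, here is a minimal Python sketch. It assumes you have timed the same task several times with the original setup and several times with the modified one, with everything else held constant. The function names and the cycle times used in the example are hypothetical, and Welch's t-statistic is just one reasonable way to judge whether a difference is larger than the run-to-run noise; it is not a method prescribed by this article.

```python
from math import sqrt
from statistics import mean, stdev


def welch_t(a, b):
    """Welch's t-statistic for two samples with possibly unequal variances."""
    standard_error = sqrt(stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b))
    return (mean(a) - mean(b)) / standard_error


def compare_cycle_times(baseline, modified):
    """Summarize two sets of cycle times (in seconds) for the same task."""
    mean_baseline = mean(baseline)
    mean_modified = mean(modified)
    return {
        "mean_baseline": mean_baseline,
        "mean_modified": mean_modified,
        # Positive values mean the modified setup is faster.
        "seconds_saved": mean_baseline - mean_modified,
        "percent_saved": 100 * (mean_baseline - mean_modified) / mean_baseline,
        # A large absolute value suggests the difference is not just noise.
        "t_statistic": welch_t(baseline, modified),
    }


if __name__ == "__main__":
    # Hypothetical measurements, made up purely for illustration.
    baseline_times = [12.1, 11.8, 12.4, 12.0, 11.9]
    modified_times = [10.9, 11.2, 10.8, 11.1, 11.0]
    for name, value in compare_cycle_times(baseline_times, modified_times).items():
        print(f"{name}: {value:.2f}")
```

Cycle time is only one possible metric. The same comparison works for any number you can measure repeatedly, such as the count of successful picks per batch.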

Follow the Principles of Good Experimental Design

In some ways, defining your own benchmarks is a similar process to designing a research experiment. You can find out more about the principles of experimental design in our article "How to Design a Robotics Experiment in 5 Steps" and learn how to form a solid experimental hypothesis in our article "This Single Statement Generates Great Robotics Research."

How do you compare the performance of your robot applications? What effects could benchmarking have on your business? Which parts of your work do you already measure with metrics? Tell us in the comments below or join the discussion on LinkedIn, Twitter, Facebook or the DoF professional robotics community.
