How to Be a Great Robot Programmer

Posted on Apr 06, 2016 in Robot Programming

What makes a great robot programmer? In this post we ask why it's challenging to accurately comprehend the limitations of a robot, and why this skill is necessary to maximize the robot’s capabilities when programming. We find out why humans are not great at imagining the world "through the eyes of the robot" and give a simple technique you can use to improve your understanding of its capabilities.

Do you know the one thing that makes you a great robot programmer? It's not your skills in advanced mathematics; it's not your ability to code ultra-efficient algorithms; and it's not even your familiarity with programming languages (although obviously these can all be quite helpful). When programming physical robots, one thing is often far more important.

Great robot programmers are able to comprehend how the robot perceives the world, and program effectively within the robot's limitations.

How Do We Perceive the World?

Humans are remarkably adept at understanding a machine with a limited set of sensors, as long as that machine is simple. A small child is very quick to learn that a mobile robot will turn around when they press one of its whisker switches. However, when the sensors and the robot get more complex, it can be challenging to mentally picture the world from the robot's perspective.

The challenge comes, in part, because we humans are unconscious of how we ourselves perceive the world. Our brains do all the computation automatically, so we don't have to worry about how we process our "sensor data" or how we coordinate our joints. The path of perception and action is broadly similar in humans and robots, as you can see in these (very simplified) representations:

Humans: World -> Senses -> Unconscious Brain (Sense information -> Mental model of world -> Decision -> Action instructions) -> Muscles -> World

Robots: World -> Sensors -> Computer (Sensor data -> Virtual model of world -> Task coding -> Low-level electronics code) -> Actuators -> World

Both the human brain and the computer incorporate data from the senses or sensors into a virtual model of the world. In robots, this virtual model lives in the robot's code and might be represented as a 3D model. In humans, the virtual copy of our sense data is called a percept. We are completely unconscious that these percepts even exist. Instead, we feel like we perceive and act on the world directly.
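To make the robot pipeline concrete, here is a minimal Python sketch of one pass through it for a simple whisker-switch robot like the one mentioned earlier. Everything in it is an assumption made for illustration: the names `WorldModel`, `sense`, `update_model`, `decide` and `step`, and the 5 cm trigger distance, are hypothetical and not taken from any real robot API.

```python
from dataclasses import dataclass


@dataclass
class WorldModel:
    """The robot's entire 'virtual model of the world': a single boolean."""
    obstacle_ahead: bool = False


def sense(true_distance_m: float, trigger_distance_m: float = 0.05) -> bool:
    """Hypothetical whisker switch: closes only when an object is within reach.

    The robot never sees true_distance_m itself, only the switch state.
    """
    return true_distance_m <= trigger_distance_m


def update_model(model: WorldModel, switch_closed: bool) -> WorldModel:
    """Sensor data -> virtual model of the world."""
    model.obstacle_ahead = switch_closed
    return model


def decide(model: WorldModel) -> str:
    """Virtual model -> task-level command (assumed command names)."""
    return "turn_around" if model.obstacle_ahead else "drive_forward"


def step(model: WorldModel, true_distance_m: float) -> str:
    """One pass through World -> Sensors -> Computer -> command for the actuators."""
    reading = sense(true_distance_m)
    model = update_model(model, reading)
    return decide(model)


if __name__ == "__main__":
    for distance in (2.0, 0.06, 0.03):
        print(f"wall at {distance} m: {step(WorldModel(), distance)}")
```

Note that a wall 6 cm away and a wall 2 m away look identical to this robot. Until the whisker touches something, its model of the world says nothing is there.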

Programming a robot effectively means taking your mind out of its comfort zone. It means starting to think consciously about some task you usually perform unconsciously - i.e. noticing the limits of your senses and movement in the world. It also means imagining what it's like to lose some of your perception, as well as what it's like to gain new senses.

Seeing the World as a Robot Does

Most robots perceive the world in a very limited way. For example, even if the integrated force sensors in a robot's fingertip are very sensitive, the finger will likely be hundreds of times less sensitive than your fingertip. This is partly because the signals from the human fingertip go through some impressive pre-processing in the brain. Many robot sensors are more accurate than human senses (which are actually quite limited), but what is missing is the advanced sensor fusion that our brain performs.

The robot may also be able to detect signals which human senses can't detect. LIDAR sensors, for example, allow robots to capture 3D point clouds of the environment. This is a sense which we would find difficult to imagine, were it not for helpful tools like RViz which allow us to render the point clouds in color.

The robot's joints and actuators are, on the whole, less flexible and adaptable than those in humans. However, they are also far more accurate than human muscles and joints. Again, it is the underlying programming that makes robots unable to match our abilities in perceiving and acting on the world.

When programming a robot, it's important to take these limitations and enhancements into consideration. None of us really believe that robots are advanced enough (yet) to match human dexterity. The challenge is to accurately comprehend the robot's abilities and limitations. Don't underestimate its capabilities, or you will not maximize the potential of the technology. Similarly, don't overestimate them or you will become frustrated that the robot isn't able to perform all of the tasks that you want it to perform.

How to See Eye-to-Eye With Your Robot

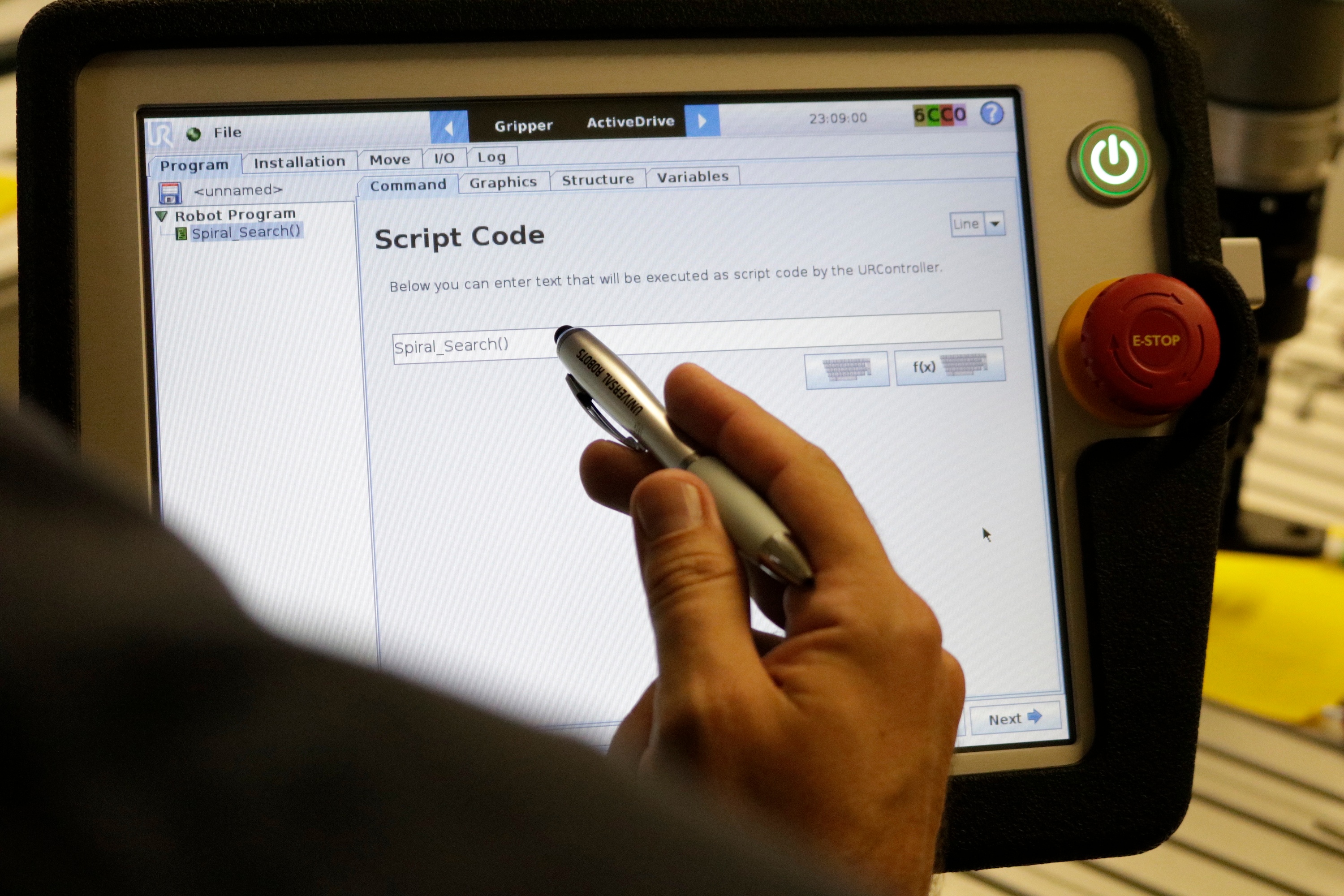

We've seen many improvements in robot programming recently. Manufacturers and researchers are continuously developing new ways to make programming easier. Projects like Quipt are looking at how to integrate reliable sensors into industrial robots so they can be programmed by gesture, and of course some industrial robots can be taught through hand guiding.

Even with easy programming techniques like these, it's important to "bring yourself down to the robot's level". If you add new technologies to an existing robot, it's important to reconsider the robot's perception as if it were the first time you used it. This way, you won't misestimate its new capabilities. We recently showed how a 13-year-old can program a UR robot. This isn't surprising, as someone that age won't have any preconceptions about the robot programming interface; they will learn the robot's capabilities and program within its limitations.

The following techniques for "seeing eye-to-eye" with your robot are very simple and quick, but effective:

- Before you start to program... stop. Put down your teach pendant or keyboard.

- Take a few minutes to physically (and mentally) walk yourself through the task. Imagine how the task is perceived by the robot, either through its sensors (if your robot has them) or by simply imagining how the task would unfold through its kinematic chain.

- If the robot does have sensors, take some time to look at the workspace through the sensors' output. If you have a camera, try performing the task by hand while only looking at the camera image on screen.

- Actively try to find the limits of your robot's capabilities, and note down how to maximize them through programming.
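One way to walk a task through the kinematic chain before picking up the pendant is to check on paper (or in a few lines of code) which points the arm can reach at all. The sketch below does this for a hypothetical two-link planar arm. The link lengths and the function names `forward_kinematics` and `is_reachable` are assumptions for illustration, and joint limits and collisions are ignored, so treat it as a rough first check rather than a model of any real robot.

```python
import math


def forward_kinematics(l1: float, l2: float, theta1: float, theta2: float):
    """Tool position (x, y) of a two-link planar arm.

    l1, l2 are link lengths in metres; theta1 is the shoulder angle and
    theta2 the elbow angle relative to the first link, both in radians.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def is_reachable(l1: float, l2: float, x: float, y: float) -> bool:
    """True if (x, y) lies in the arm's workspace, ignoring joint limits.

    The workspace is a ring around the base: no further out than the fully
    stretched arm, and no closer in than the fully folded arm.
    """
    distance = math.hypot(x, y)
    return abs(l1 - l2) <= distance <= l1 + l2


if __name__ == "__main__":
    L1, L2 = 0.4, 0.3  # assumed link lengths in metres
    for target in [(0.5, 0.2), (0.8, 0.0), (0.05, 0.0)]:
        print(target, "reachable" if is_reachable(L1, L2, *target) else "out of reach")
```

A target that you could touch without thinking, such as a part sitting right next to the base, can fall inside the ring's inner hole and be out of reach for the arm.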

If you already do this before programming a robot, then great. You already have the ingredients to be a great robot programmer. If you don't carry out these steps yet, now is a great time to start. Not only will it allow you to maximize your use of the robot's capabilities, it will also help you to choose the best tasks for your robot.

How do you ensure that you maximize your robot's capabilities? Do you have any tips on how to be a better robot programmer? What do you think is the most important skill for robot programmers to have? Tell us in the comments below or join the discussion on LinkedIn, Twitter or Facebook.
