How Fast Is Your Collaborative Robot Getting ‘Smarter’?

Posted on Mar 27, 2017 7:00 AM. 4 min read time

One of the great things about collaborative robots is that they’re very good at learning on the job. Perhaps it’s more correct to say that the people building and programming them are constantly learning how to improve them by observing how the robots perform in factories and other production environments. These insights form the foundation of further development of technologies and systems that boost collaborative robots’ potential.

“The main driver is that robots and their tools are becoming easier to integrate and program. This has a direct effect on what tasks are easy or difficult to hand over to a collaborative robot,” Nicolas Lauzier, R&D Director at Robotiq, says.

To mention a few examples:

- Robotiq Wrist Camera makes it easy for collaborative robots to locate objects on a plane using a compact camera on the wrist of the robot.

- ArtiMinds RPS offers intuitive, easy programming of force-control applications, which are central to a number of tasks.

- Pick-it 3D vastly enhances the efficiency of bin picking applications.

Illustrating the Easy and the Hard

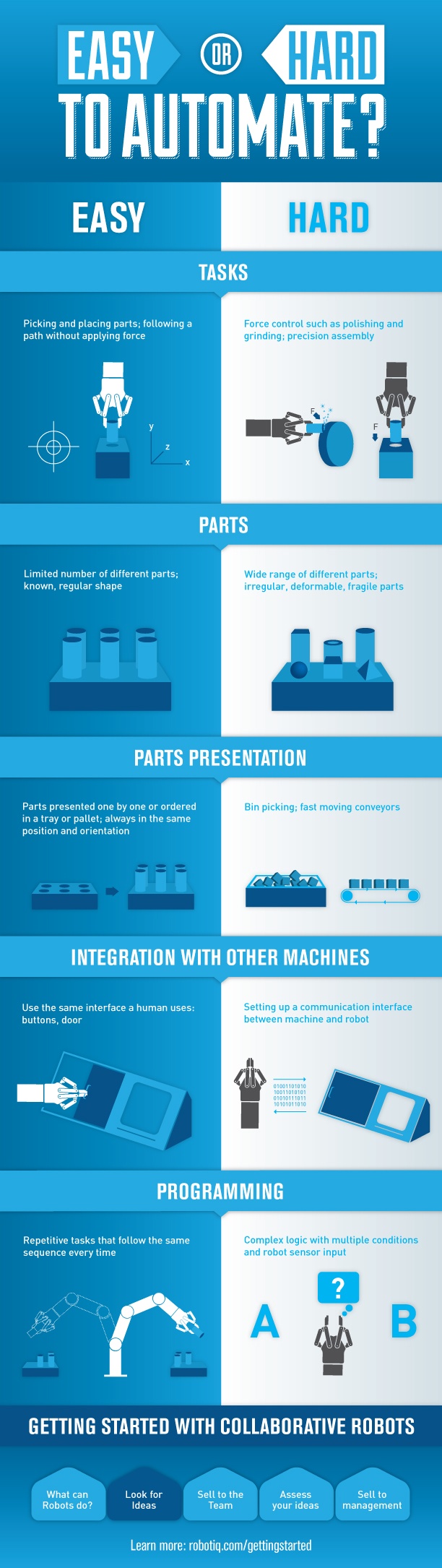

Towards the end of 2015, Robotiq published an infographic describing which tasks are generally easy, and which are difficult, to hand over to collaborative robots.

In the ‘Easy’ column were tasks such as picking and placing parts, distinguishing between a limited number of known parts, ordering shapes in a tray or a pallet and carrying out repetitive tasks that require the same steps every time.

The ‘Hard’ column was dominated by tasks where things become more varied. Examples included grinding with varied force, some kinds of precision assembly, distinguishing between a wide variety of shapes, bin picking different parts and carrying out tasks requiring complex logic conditions and/or many different kinds of robot sensor input.

Less than two years later, the infographic serves as a great yardstick for how quickly collaborative robots are progressing and evolving.

The Grind Becomes Easier

Sometimes we forget what a marvel of construction our body is. What seem like simple tasks often require highly advanced interaction between manipulators (for example our arms), end effectors (hands and fingers) and our sensory and software systems (senses and brain).

Tasks like grinding or polishing painted parts are prime examples. The supple manipulations of pressure, angling the polisher and knowing when to move on to a different part might seem straightforward to you and me, but they are not for a robot.

However, that has changed dramatically over the last couple of years. Thanks to massive advances in fields such as force control, collaborative robots from companies like Universal Robots are now capable of performing most basic forms of grinding and polishing. That said, highly non-repetitive tasks that require a lot of variation in pressure, like polishing car parts, remain solidly in the ‘Hard’ column.

Finding the Right Shape

As described in the free Robotiq eBook ‘Robotic Bin Picking Fundamentals’, the ability to sort a variety of unordered shapes has long been considered a holy grail of robotics.

Big advances are now being made thanks to the combination of systems like the Robotiq Wrist Camera and ArtiMinds software. Other robotics companies, such as FANUC, have also shown rapid progress.

“I would say that random bin picking is already approaching mainstream,” David Dechow, Staff Engineer-Intelligent Robotics/Machine Vision at FANUC America Corporation, recently told Robotics.org.

Intuitive Programming Part of the Key

The two areas mentioned above illustrate just how rapidly collaborative robots are progressing. Similar advances are happening across the board.

Nicolas Lauzier sees intuitive programming structures and integration of sensors as central to the speed of the advances. They mean that collaborative robots can now be installed and programmed very rapidly, sometimes in just one day. In short, he sees robots as smart when they can carry out complex applications even when trained by non-expert humans.

“Robots become smart when they use things like force/torque sensors, cameras and software to adapt to their environment. This integration enables them to make more complex decisions,” he says.

“In the last couple of years, we have seen the rise of various technologies aimed at reducing the complexity of the integration for applications and increasingly include plug & play components, easy-to-use offline programming software and self-adapting algorithms - including deep learning. In a certain way, we can say that the robots are becoming ‘smart’ because they easily understand the non-expert human input and transfer this knowledge to execute tasks in a robust and repeatable way.”
